5 Statistical theory for predictive soil mapping

Edited by: Hengl T., Heuvelink G.B.M and MacMillan R. A.

5.1 Aspects of spatial variability of soil variables

In this chapter we review the statistical theory for soil mapping. We focus on models considered most suitable for practical implementation and use with soil profile data and gridded covariates, and we provide the mathematical-statistical details of the selected models. We start by revisiting some basic statistical aspects of soil mapping, and conclude by illustrating a proposed framework for reproducible, semi-automated mapping of soil variables using simple, real-world examples.

The code and examples are provided only for illustration. More complex predictive modeling is described in chapter 6. To install and optimize all packages used in this chapter please refer to section 2.5.

5.1.1 Modelling soil variability

Soils vary spatially in a way that is often only partially understood. The main (deterministic) causes of soil spatial variation are the well-known causal factors — climate, organisms, relief, parent material and time — but how these factors jointly shape the soil over time is a very complex process that is (still) extremely difficult to model mechanistically. Moreover, mechanistic modelling approaches require large sets of input data that are realistically not available in practice. Some initial steps have been made, notably for mechanistic modelling of vertical soil variation (see e.g. Finke and Hutson (2008), Sommer, Gerke, and Deumlich (2008), Minasny, McBratney, and Salvador-Blanes (2008), and Vanwalleghem et al. (2010)), but existing approaches are still rudimentary and cannot be used for operational soil mapping. Mainstream soil mapping therefore takes an empirical approach in which the relationship between the soil variable of interest and causal factors (or their proxies) is modelled statistically, using various types of regression models. The explanatory variables used in regression are also known as covariates (a list of common covariates used in soil mapping is provided in chapter 4).

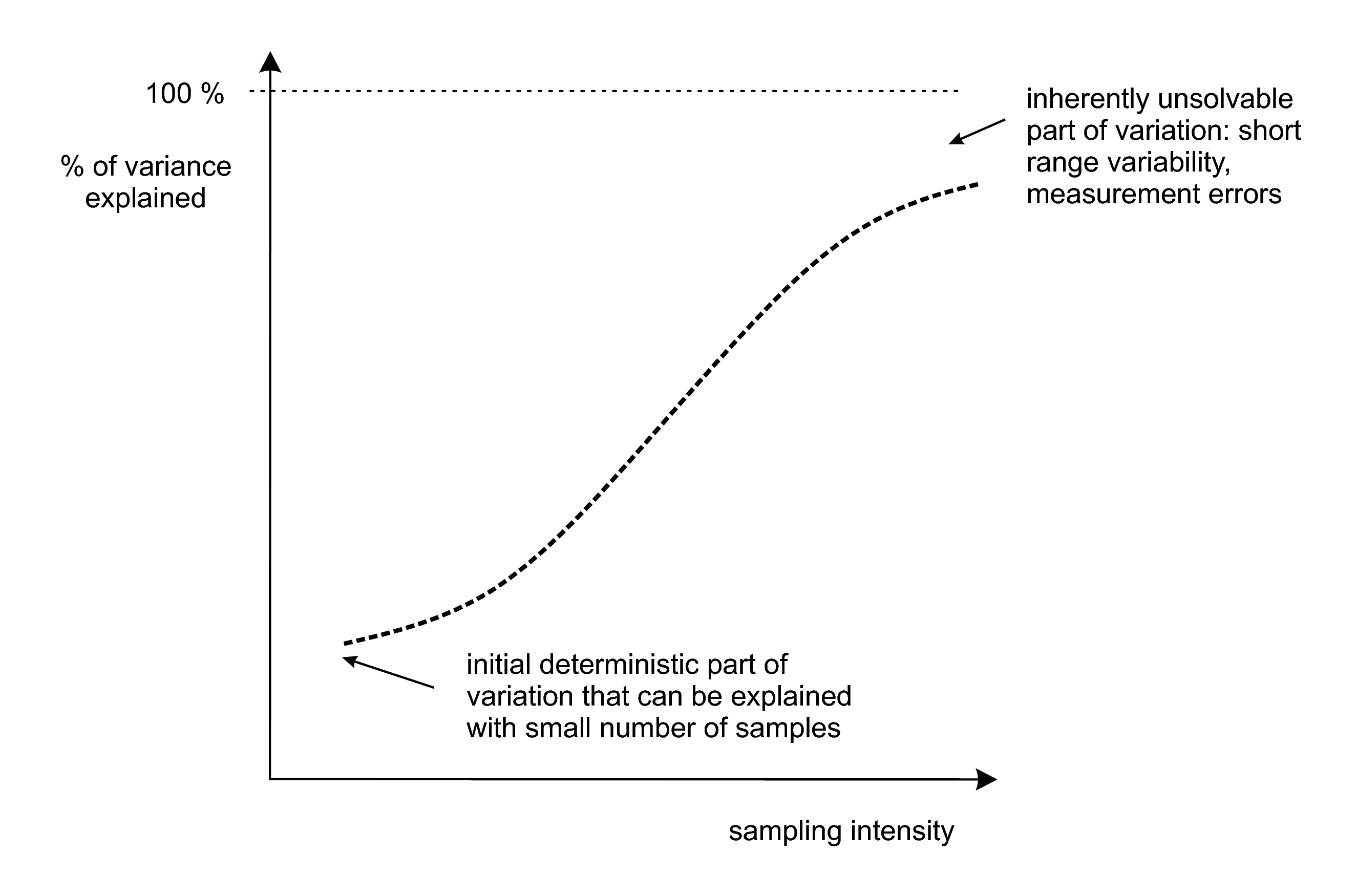

Regression models explain only part of the variation (i.e. variance) of the soil variable of interest, because:

The structure of the regression model does not represent the true mechanistic relationship between the soil and its causal factors.

The regression model includes only a few of the many causal factors that formed the soil.

The covariates used in regression are often only incomplete proxies of the true soil forming factors.

The covariates often contain measurement errors and/or are measured at a much coarser scale (i.e. support) than that of the soil that needs to be mapped.

As a result, soil spatial regression models will often display a substantial amount of residual variance, which may well be larger than the amount of variance explained by the regression itself. The residual variation can subsequently be analysed on spatial structure through a variogram analysis. If there is spatial structure, then kriging the residual and incorporating the result of this in mapping can improve the accuracy of soil predictions (Hengl, Heuvelink, and Rossiter 2007).

5.1.2 Universal model of soil variation

From a statistical point of view, it is convenient to distinguish between three major components of soil variation: (1) deterministic component (trend), (2) spatially correlated component and (3) pure noise. This is the basis of the universal model of soil variation (Burrough and McDonnell 1998; Webster and Oliver 2001, 133):

\[\begin{equation} Z({s}) = m({s}) + \varepsilon '({s}) + \varepsilon ''({s}) \tag{5.1} \end{equation}\]where \(s\) is two-dimensional location, \(m({s})\) is the deterministic component, \(\varepsilon '({s})\) is the spatially correlated stochastic component and \(\varepsilon ''({s})\) is the pure noise (micro-scale variation and measurement error). This model was probably first introduced by Matheron (1969), and has been used as a general framework for spatial prediction of quantities in a variety of environmental research disciplines.

The universal model of soil variation assumes that there are three major components of soil variation: (1) the deterministic component (function of covariates), (2) spatially correlated component (treated as stochastic) and (3) pure noise.

The universal model of soil variation model (Eq.(5.1)) can be further generalised to three-dimensional space and the spatio-temporal domain (3D+T) by letting the variables also depend on depth and time:

\[\begin{equation} Z({s}, d, t) = m({s}, d, t) + \varepsilon '({s}, d, t) + \varepsilon ''({s}, d, t) \tag{5.2} \end{equation}\]where \(d\) is depth expressed in meters downward from the land surface and \(t\) is time. The deterministic component \(m\) may be further decomposed into parts that are purely spatial, purely temporal, purely depth-related or mixtures of all three. Space-time statistical soil models are discussed by Grunwald (2005b), but this area of soil mapping is still rather experimental.

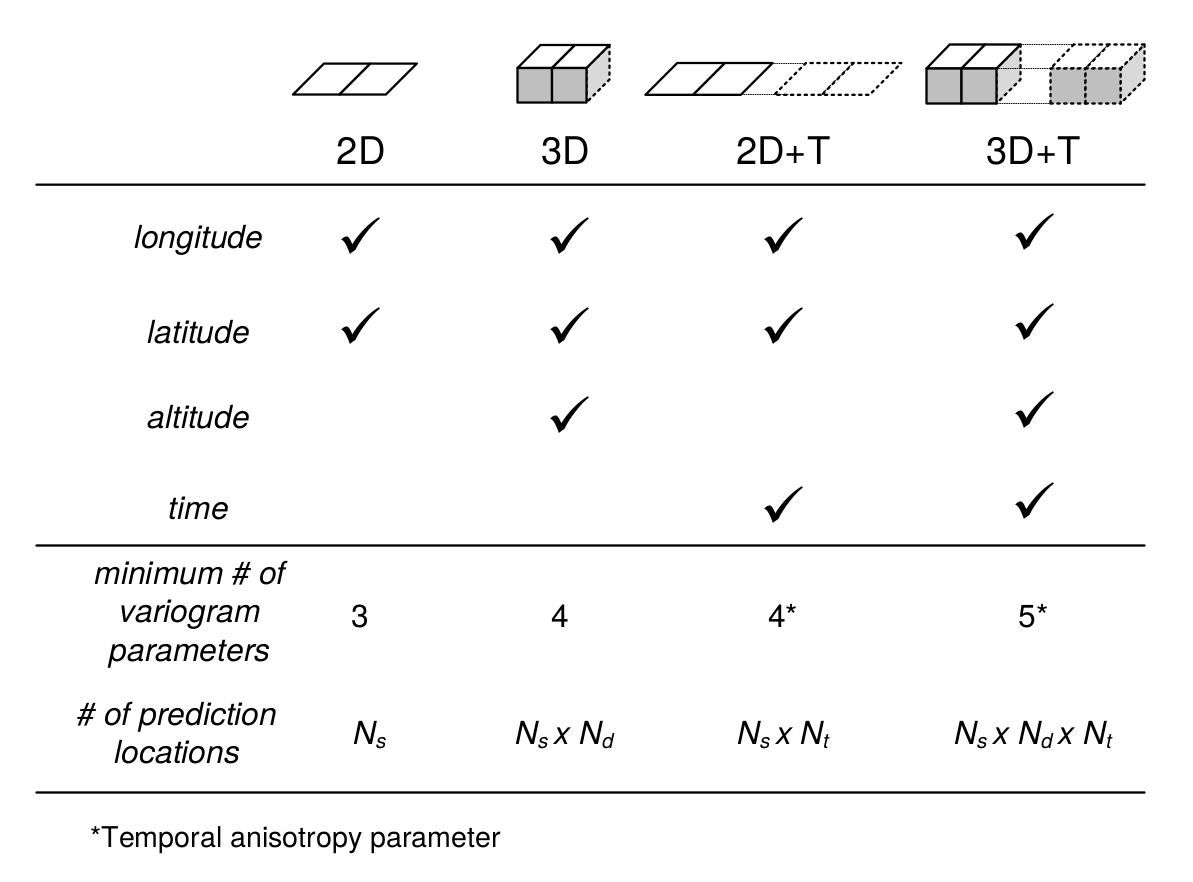

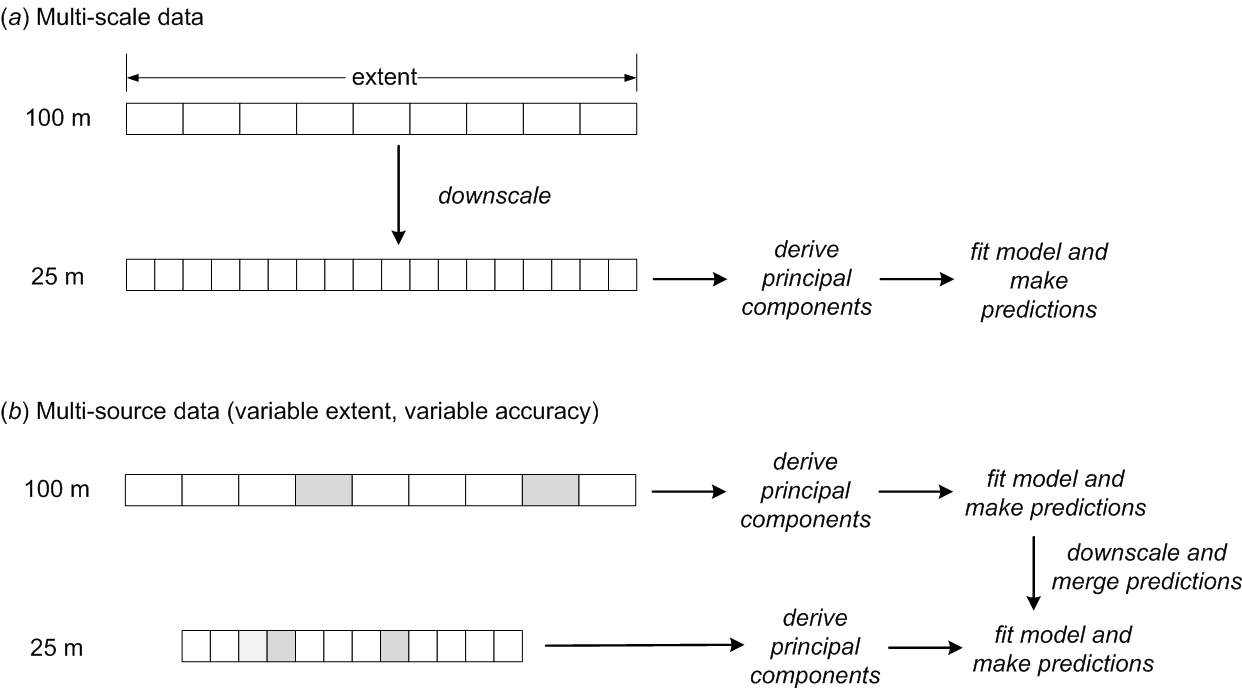

In this chapter, we mainly focus on purely 2D models but also present some theory for 3D models, while 2D+T and 3D+T models of soil variation are significantly more complex (Fig. 5.1).

Figure 5.1: Number of variogram parameters assuming an exponential model, minimum number of samples and corresponding increase in number of prediction locations for 2D, 3D, 2D+T and 3D+T models of soil variation. Here “altitude” refers to vertical distance from the land surface, which is in case of soil mapping often expressed as negative vertical distance from the land surface.

One of the reasons why 2D+T and 3D+T models of soil variations are rare is because there are very few point data sets that satisfy the requirements for analysis. One national soil data set that could be analyzed using space-time geostatistics is, for example, the Swiss soil-monitoring network (NABO) data set (Desaules, Ammann, and Schwab 2010), but even this data set does not contain complete profile descriptions following international standards. At regional and global scales it would be even more difficult to find enough data to fit space-time models (and to fit 3D+T variogram models could be even more difficult). For catchments and plots, space-time datasets of soil moisture have been recorded and used in space-time geostatistical modelling (see e.g. Snepvangers, Heuvelink, and Huisman (2003) and Jost, Heuvelink, and Papritz (2005)).

Statistical modelling of the spatial distribution of soils requires field observations because most statistical methods are data-driven. The minimum recommended number of points required to fit 2D geostatistical models, for example, is in the range 50–100 points, but this number increases with any increase in spatial or temporal dimension (Fig. 5.1). The Cookfarm data set for example contains hundreds of thousands of observations, although the study area is relatively small and there are only ca. 50 station locations (Gasch et al. 2015).

The deterministic and stochastic components of soil spatial variation are separately described in more detail in subsequent sections, but before we do this, we first address soil vertical variability and how it can be modelled statistically.

5.1.3 Modelling the variation of soil with depth

Soil properties vary with depth, in some cases much more than in the horizontal direction. There is an increasing awareness that the vertical dimension is important and needs to be incorporated in soil mapping. For example, many spatial prediction models are built using ambiguous vertical reference frames such as predicted soil property for “top-soil” or “A-horizon”. Top-soil can refer to different depths / thicknesses and so can the A-horizon range from a few centimeters to over one meter. Hence before fitting a 2D spatial model to soil profile data, it is a good idea to standardize values to standard depths, otherwise soil observation depth becomes an additional source of uncertainty. For example soil organic carbon content is strongly controlled by soil depth, so combining values from two A horizons one thick and the other thin, would increase the complexity of 2D soil mapping because a fraction of the variance is controlled by the depth, which is ignored.

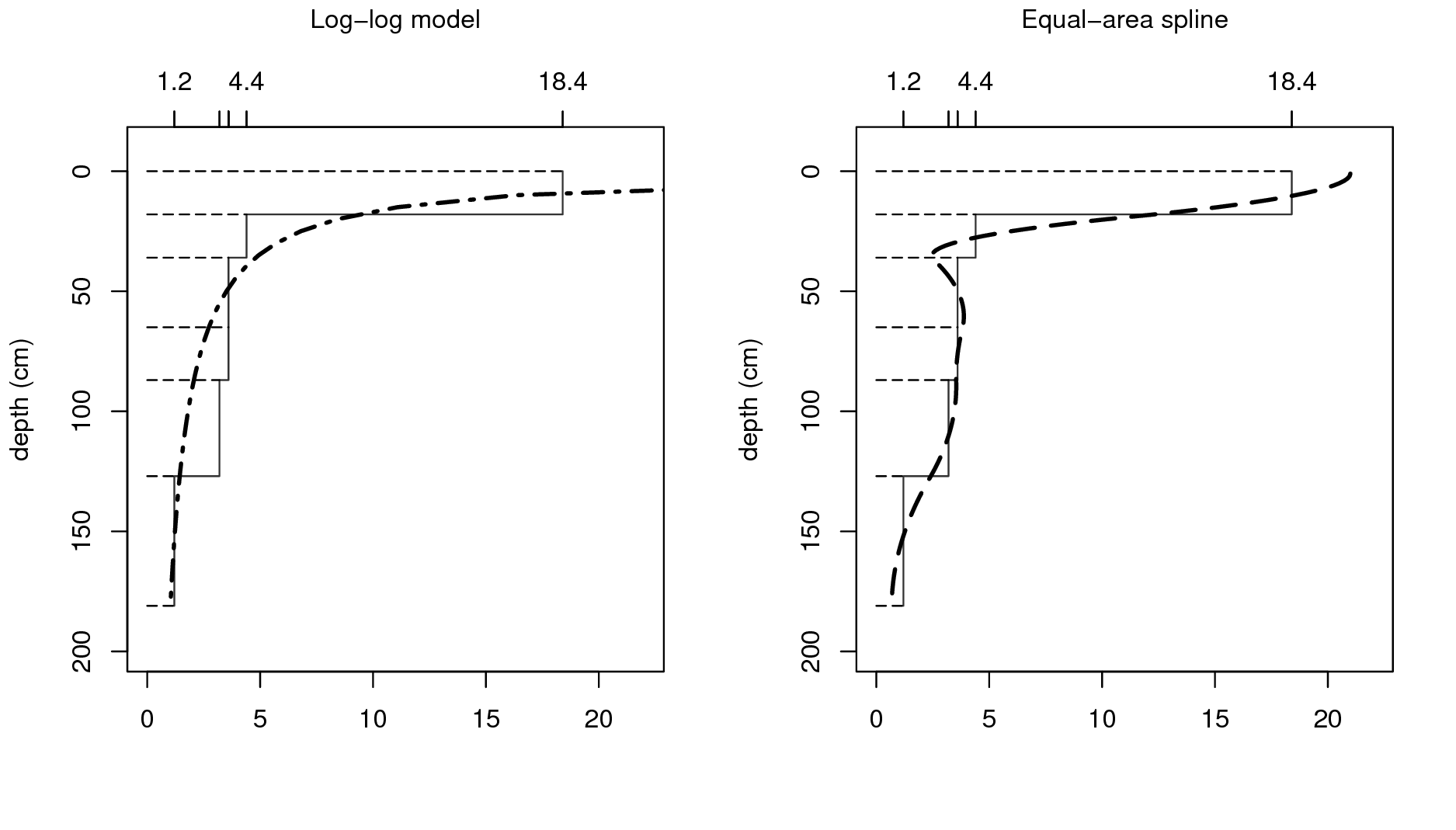

The concept of perfectly homogeneous soil horizons is often too restrictive and can be better replaced with continuous representations of soil vertical variation i.e. soil-depth functions or curves. Variation of soil properties with depth is typically modelled using one of two approaches (Fig. 5.2):

Continuous vertical variation — This assumes that soil variables change continuously with depth. The soil-depth relationship is modelled using either:

Parametric model — The relationship is modelled using mathematical functions such as logarithmic or exponential decay functions.

Non-parametric model — The soil property changes continuously but without obvious regularity with depth. Changes in values are modelled using locally fitted functions such as piecewise linear functions or splines.

Abrupt or stratified vertical variation — This assumes that soil horizons are distinct and homogeneous bodies of soil material and that soil properties are constant within horizons and change abruptly at boundaries between horizons.

Combinations of the two approaches are also possible, such as the use of exponential decay functions per soil horizon (B. Kempen, Brus, and Stoorvogel 2011).

Parametric continuous models are chosen to reflect pedological knowledge e.g. knowledge of soil forming processes. For example, organic carbon usually originates from plant production i.e. litter or roots. Generally, the upper layers of the soil tend to have greater organic carbon content, which decreases continuously with depth, so that the soil-depth relationship can be modelled with a negative-exponential function:

\[\begin{equation} {\texttt{ORC}} (d) = {\texttt{ORC}} (d_0) \cdot \exp(-\tau \cdot d) \tag{5.3} \end{equation}\]where \(\texttt{ORC}(d)\) is the soil organic carbon content at depth (\(d\)), \({\texttt{ORC}} (d_0)\) is the organic carbon content at the soil surface and \(\tau\) is the rate of decrease with depth. This model has only two parameters that must be chosen such that model averages over sampling horizons match those of the observations as closely as possible. Once the model parameters have been estimated, we can easily predict concentrations for any depth interval.

Consider for example this sample profile from Nigeria:

lon = 3.90; lat = 7.50; id = "ISRIC:NG0017"; FAO1988 = "LXp"

top = c(0, 18, 36, 65, 87, 127)

bottom = c(18, 36, 65, 87, 127, 181)

ORCDRC = c(18.4, 4.4, 3.6, 3.6, 3.2, 1.2)

munsell = c("7.5YR3/2", "7.5YR4/4", "2.5YR5/6", "5YR5/8", "5YR5/4", "10YR7/3")

## prepare a SoilProfileCollection:

prof1 <- plyr::join(data.frame(id, top, bottom, ORCDRC, munsell),

data.frame(id, lon, lat, FAO1988), type='inner')

#> Joining by: id

prof1$mdepth <- prof1$top+(prof1$bottom-prof1$top)/2we can fit a log-log model by using e.g.:

d.lm <- glm(ORCDRC ~ log(mdepth), data=prof1, family=gaussian(log))

options(list(scipen=3, digits=2))

d.lm$fitted.values

#> 1 2 3 4 5 6

#> 18.1 6.3 3.5 2.4 1.7 1.2which shows that the log-log fit comes relatively close to the actual values. Another possibility would be to fit a power-law model:

\[\begin{equation} {\texttt{ORC}} (d) = a \cdot d^b \tag{5.4} \end{equation}\]A disadvantage of a single parametric soil property-depth model along the entire soil profile is that these completely ignore stratigraphy and abrupt changes at the boundaries between soil horizons. For example, B. Kempen, Brus, and Stoorvogel (2011) show that there are many cases where highly contrasting layers of peat can be found buried below the surface due to cultivation practices or holocene drift sand. The model given by Eq.(5.4) illustrated in Fig. 5.2 (left) will not be able to represent such abrupt changes.

Non-parametric soil-depth functions are more flexible and can represent observations of soil property averages for sampling layers or horizons more accurately. One such technique that is particularly interesting is equal-area or mass-preserving splines (Bishop, McBratney, and Laslett 1999; Malone et al. 2009) because it ensures that, for each sampling layer (usually a soil horizon), the average of the spline function equals the measured value for the horizon. Disadvantages of the spline model are that it may not fit well if there are few observations along the soil profile and that it may create unrealistic values (through overshoots or extrapolation) in some instances, for example near the surface. Also, mass-preserving splines cannot accommodate discontinuities unless, of course, separate spline functions are fitted above and below the discontinuity.

Figure 5.2: Vertical variation in soil carbon modelled using a logarithmic function (left) and a mass-preserving spline (right) with abrupt changes by horizon ilustrated with solid lines.

To fit mass preserving splines we can use:

library(aqp)

#> This is aqp 1.17

#>

#> Attaching package: 'aqp'

#> The following object is masked from 'package:base':

#>

#> union

library(rgdal)

#> Loading required package: sp

#> rgdal: version: 1.3-6, (SVN revision 773)

#> Geospatial Data Abstraction Library extensions to R successfully loaded

#> Loaded GDAL runtime: GDAL 2.2.2, released 2017/09/15

#> Path to GDAL shared files: /usr/share/gdal/2.2

#> GDAL binary built with GEOS: TRUE

#> Loaded PROJ.4 runtime: Rel. 4.8.0, 6 March 2012, [PJ_VERSION: 480]

#> Path to PROJ.4 shared files: (autodetected)

#> Linking to sp version: 1.3-1

library(GSIF)

#> GSIF version 0.5-5 (2019-01-04)

#> URL: http://gsif.r-forge.r-project.org/

#>

#> Attaching package: 'GSIF'

#> The following object is masked _by_ '.GlobalEnv':

#>

#> munsell

prof1.spc <- prof1

depths(prof1.spc) <- id ~ top + bottom

#> Warning: converting IDs from factor to character

site(prof1.spc) <- ~ lon + lat + FAO1988

coordinates(prof1.spc) <- ~ lon + lat

proj4string(prof1.spc) <- CRS("+proj=longlat +datum=WGS84")

## fit a spline:

ORCDRC.s <- mpspline(prof1.spc, var.name="ORCDRC", show.progress=FALSE)

#> Fitting mass preserving splines per profile...

ORCDRC.s$var.std

#> 0-5 cm 5-15 cm 15-30 cm 30-60 cm 60-100 cm 100-200 cm soil depth

#> 1 21 17 7.3 3.3 3.6 1.8 181where var.std shows average fitted values for standard depth intervals

(i.e. those given in the GlobalSoilMap specifications), and var.1cm

are the values fitted at 1–cm increments

(Fig. 5.2).

A disadvantage of using mathematical functions to convert soil observations at specific depth intervals to continuous values along the whole profile is that these values are only estimates with associated estimation errors. If estimates are treated as if these were observations then an important source of error is ignored, which may jeopardize the quality of the final soil predictions and in particular the associated uncertainty (see further section 5.3). This problem can be avoided by taking, for example, a 3D modelling approach (Poggio and Gimona 2014; Hengl, Heuvelink, et al. 2015), in which model calibration and spatial interpolation are based on the original soil observations directly (although proper use of this requires that the differences in vertical support between measurements are taken into account also). We will address this also in later sections of this chapter, among others in section 6.1.4.

5.1.4 Vertical aggregation of soil properties

As mentioned previously, soil variables refer to aggregate values over specific depth intervals (see Fig. 5.2). For example, the organic carbon content is typically observed per soil horizon with values in e.g. g/kg or permilles (Conant et al. 2010; Baritz et al. 2010; Panagos et al. 2013). The Soil Organic Carbon Storage (or Soil Organic Carbon Stock) in the whole profile can be calculated by using Eq (7.1). Once we have determined soil organic carbon storage (\(\mathtt{OCS}\)) per horizon, we can derive the total organic carbon in the soil by summing over all (\(H\)) horizons:

\[\begin{equation} \mathtt{OCS} = \sum\limits_{h = 1}^H { \mathtt{OCS}_h } \tag{5.5} \end{equation}\]Obviously, the horizon-specific soil organic carbon content (\(\mathtt{ORC}_h\)) and total soil organic carbon content (\(\mathtt{OCS}\)) are NOT the same variables and need to be analysed and mapped separately.

In the case of pH (\(\mathtt{PHI}\)) we usually do not aim at estimating the actual mass or quantity of hydrogen ions. To represent a soil profile with a single number, we may take a weighted mean of the measured pH values per horizon:

\[\begin{equation} \mathtt{PHI} = \sum\limits_{h = 1}^H { w_h \cdot \mathtt{PHI}_h }; \qquad \sum\limits_{h = 1}^H{w_h} = 1 \tag{5.6} \end{equation}\]where the weights can be chosen proportional to the horizon thickness:

\[\begin{equation} w _h = \frac{{\mathtt{HSIZE}_h}}{\sum\limits_{h = 1}^H {{\mathtt{HSIZE}}_h}} \end{equation}\]Thus, it is important to be aware that all soil variables: (A) can be expressed as relative (percentages) or absolute (mass / quantities) values, and (B) refer to specific horizons or depth intervals or to the whole soil profile.

Similar spatial support-effects show up in the horizontal, because soil observations at point locations are not the same as average or bulk soil samples taken by averaging a large number of point observations on a site or plot (Webster and Oliver 2001).

In order to avoid misinterpretation of the results of mapping, we recommend that any delivered map of soil properties should specify the support size in the vertical and lateral directions, the analysis method (detection limit) and measurement units. Such information can be included in the metadata and/or in any key visualization or plot. Likewise, any end-user of soil data should specify whether estimates of the relative or total organic carbon, aggregated or at 2D/3D point support are required.

5.2 Spatial prediction of soil variables

5.2.1 Main principles

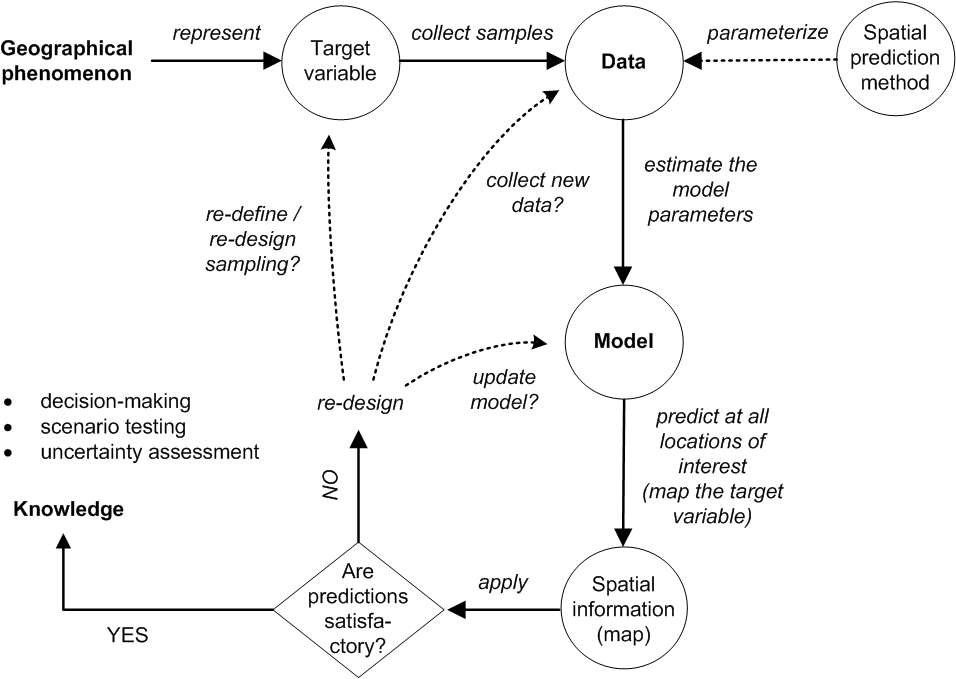

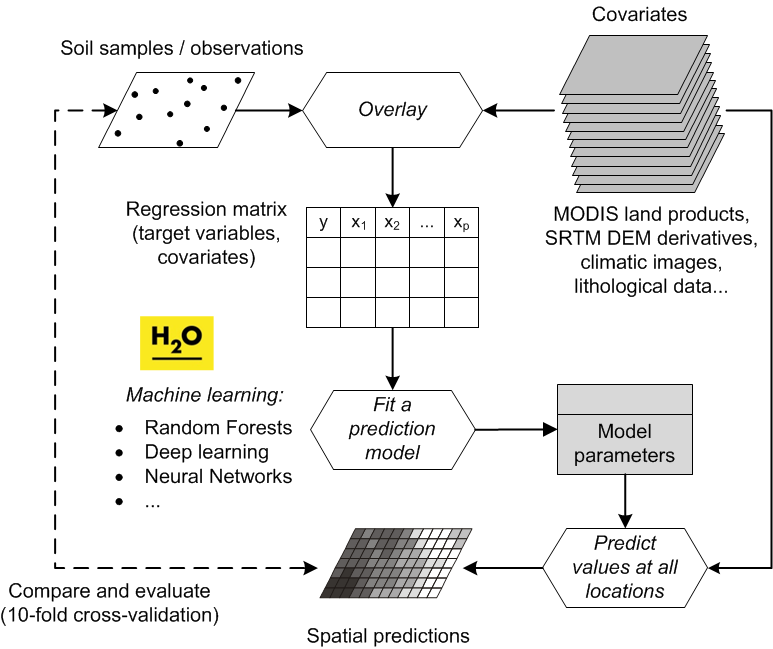

“Pragmatically, the goal of a model is to predict, and at the same time scientists want to incorporate their understanding of how the world works into their models” (Cressie and Wikle 2011). In general terms, spatial prediction consists of the following seven steps (Fig. 5.3):

Select the target variable, scale (spatial resolution) and associated geographical region of interest;

Define a model of spatial variation for the target variable;

Prepare a sampling plan and collect samples and relevant explanatory variables;

Estimate the model parameters using the collected data;

Derive and apply the spatial prediction method associated with the selected model;

Evaluate the spatial prediction outputs and collect new data / run alternative models if necessary;

Use the outputs of the spatial prediction process for decision making and scenario testing.

Figure 5.3: From data to knowledge and back: the general spatial prediction scheme applicable to many environmental sciences.

The spatial prediction process is repeated at all nodes of a grid covering \(D\) (or a space-time domain in case of spatiotemporal prediction) and produces three main outputs:

Estimates of the model parameters (e.g., regression coefficients and variogram parameters), i.e. the model;

Predictions at new locations, i.e. a prediction map;

Estimate of uncertainty associated with the predictions, i.e. a prediction error map.

It is clear from Fig. 5.3 that the key steps in the mapping procedure are: (a) choice of the sampling scheme (e.g. Ng et al. (2018) and Brus (2019)), (b) choice of the model of spatial variation (e.g. Diggle and Ribeiro Jr (2007)), and (c) choice of the parameter estimation technique (e.g. Lark, Cullis, and Welham (2006)). When the sampling scheme is given and cannot be changed, the focus of optimization of the spatial prediction process is then on selecting and fine-tuning the best performing spatial prediction method.

In a geostatistical framework, spatial prediction is estimation of values of some target variable \(Z\) at a new location (\({s}_0\)) given the input data:

\[\begin{equation} \hat Z({s}_0) = E\left\{ Z({s}_0)|z({s}_i), \; {{X}}({s}_0), \; i=1,...,n \right\} \tag{5.7} \end{equation}\]where the \(z({s}_i)\) are the input set of observations of the target variable, \({s}_i\) is a geographical location, \(n\) is the number of observations and \({{X}}({s}_0)\) is a list of covariates or explanatory variables, available at all prediction locations within the study area of interest (\({s} \in \mathbb{A}\)). To emphasise that the model parameters also influence the outcome of the prediction process, this can be made explicit by writing (Cressie and Wikle 2011):

\[\begin{equation} [Z|Y,{{\theta}} ] \tag{5.8} \end{equation}\]where \(Z\) is the data, \(Y\) is the (hidden) process that we are predicting, and \({{\theta}}\) is a list of model parameters e.g. trend coefficients and variogram parameters.

There are many spatial prediction methods for generating spatial

predictions from soil samples and covariate information. All differ in

the underlying statistical model of spatial variation, although this

model is not always made explicit and different methods may use the same

statistical model. A review of currently used digital soil mapping

methods is given, for example, in McBratney et al. (2011), while the most

extensive review can be found in McBratney, Mendonça Santos, and Minasny (2003) and McBratney, Minasny, and Stockmann (2018). Li and Heap (2010) list 40+ spatial prediction / spatial interpolation

techniques. Many spatial prediction methods are often

just different names for essentially the same thing.

What is often known under a single name, in the statistical,

or mathematical literature, can be implemented through

different computational frameworks, and lead to different outputs

(mainly because many models are not written out in the finest detail and leave

flexibility for actual implementation).

5.2.2 Soil sampling

A soil sample is a collection of field observations, usually represented as points. Statistical aspects of sampling methods and approaches are discussed in detail by Schabenberger and Gotway (2005) and de Gruijter et al. (2006), while some more practical suggestions for soil sampling can be found in Pansu, Gautheyrou, and Loyer (2001) Webster and Oliver (2001), Tan (2005), Legros (2006) and Brus (2019). Some general recommendations for soil sampling are:

Points need to cover the entire geographical area of interest and not overrepresent specific subareas that have much different characteristics than the main area.

Soil observations at point locations should be made using consistent measurement methods. Replicates should ideally be taken to quantify the measurement error.

Bulk sampling is recommended when short-distance spatial variation is expected to be large and not of interest to the map user.

If a variogram is to be estimated then the sample size should be >50 and there should be sufficient point pairs with small separation distances.

If trend coefficients are to be estimated then the covariates at sampling points should cover the entire feature space of each covariate.

The sampling design or rationale used to decide where to locate soil profile observations, or sampling points, is often not clear and may vary from case to case. Therefore, there is no guarantee that available legacy point data used as input to geostatistical modelling will satisfy the recommendations listed above. Many of the legacy profile data locations in the world were selected using convenience sampling. In fact, many points in traditional soil surveys may have been selected and sampled to capture information about unusual conditions or to locate boundaries at points of transition and maximum confusion about soil properties (Legros 2006). Once a soil becomes recognized as being widely distributed and dominant in the landscape, field surveyors often choose not to record observations when that soil is encountered, preferring to focus instead on recording unusual sites or areas where soil transition occurs. Thus the population of available soil point observations may not be representative of the true population of soils, with some soils being either over or under-represented.

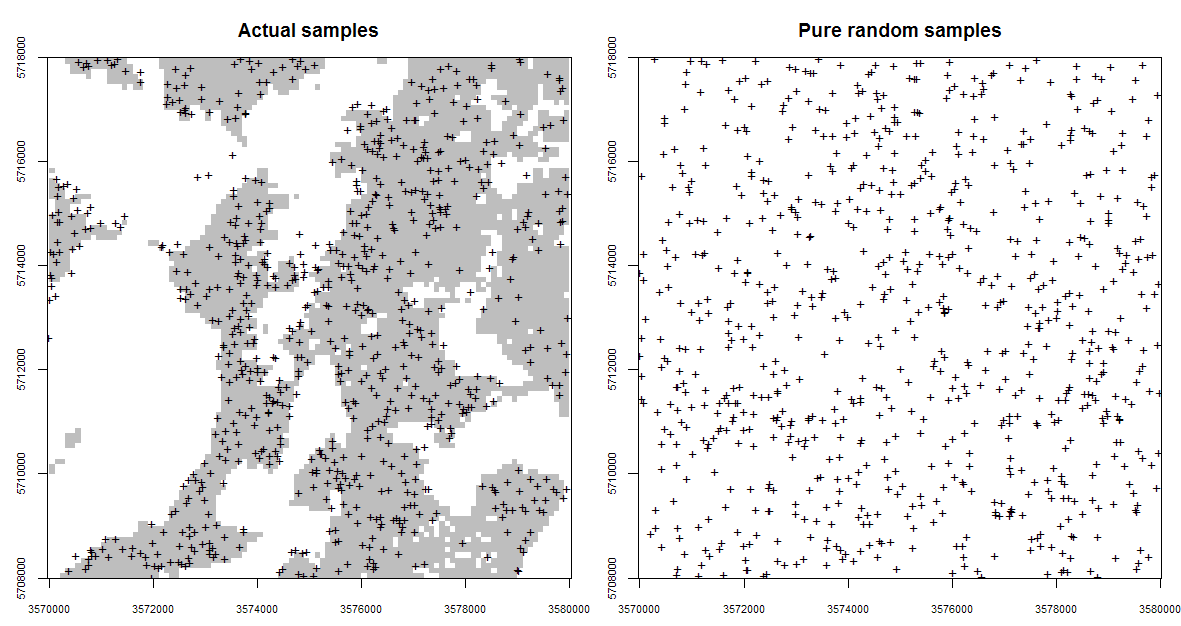

Figure 5.4: Occurrence probabilities derived for the actual sampling locations (left), and for a purely random sample design with exactly the same number of points (right). Probabilities derived using the spsample.prob function from the GSIF package. The shaded area on the left indicates which areas (in the environmental space) have been systematically represented, while the white colour indicates areas which have been systematically omitted (and which is not by chance).

Fig. 5.4 (the Ebergötzen study area) illustrates a problem of dealing with clustered samples and omission of environmental features. Using the actual samples shown in the plot on the left of Fig. 5.4 we would like to map the whole area inside the rectangle. This is technically possible, but the user should be aware that the actual Ebergötzen points systematically miss sampling some environmental features: in this case natural forests / rolling hills that were not of interest to the survey project. This does not mean that the Ebergötzen point data are not applicable for geostatistical analyses. It simply means that the sampling bias and under-representation of specific environmental conditions will lead to spatial predictions that may be biased and highly uncertain under these conditions (Brus and Heuvelink 2007).

5.2.3 Knowledge-driven soil mapping

As mentioned previously in section 1.4.8, knowledge-driven mapping is often based on unstated and unformalized rules and understanding that exists mainly in the minds and memories of the individual soil surveyors who conducted field studies and mapping. Expert, or knowledge-based, information can be converted to mapping algorithms by applying conceptual rules to decision trees and/or statistical models (MacMillan, Pettapiece, and Brierley 2005; Walter, Lagacherie, and Follain 2006; Liu and Zhu 2009). For example, a surveyor can define the classification rules subjectively, i.e. based on his/her knowledge of the area, then iteratively adjust the model until the output maps fit his/her expectation of the distribution of soils (MacMillan et al. 2010).

In areas where few, or no, field observations of soil properties are available, the most common way to produce estimates is to rely on expert knowledge, or to base estimates on data from other, similar areas. This is a kind of ‘knowledge transfer’ system. The best example of a knowledge transfer system is the concept of soil series in the USA (Simonson 1968). Soil series (+phases) are the lowest (most detailed) level classes of soil types typically mapped. Each soil series should consist of pedons having soil horizons that are similar in colour, texture, structure, pH, consistence, mineral and chemical composition, and arrangement in the soil profile.

If one finds the same type of soil series repeatedly at similar locations, then there is little need to sample the soil again at additional, similar, locations and, consequently, soil survey field costs can be reduced. This sounds like an attractive approach because one can minimize the survey costs by focusing on delineating the distribution of soil series only. The problem is that there are >15,000 soil series in the USA (Smith 1986), which obviously means that it is not easy to recognize the same soil series just by doing rapid field observations. In addition, the accuracy with which one can consistently recognize a soil series may well fail on standard kappa statistics tests, indicating that there may be substantial confusion between soil series (e.g. large measurement error).

Large parts of the world basically contain very few (sparce) field records and hence one will need to improvise to be able to produce soil predictions. One idea to map such areas is to build attribute tables for representative soil types, then map the distribution of these soil types in areas without using local field samples. Mallavan, Minasny, and McBratney (2010) refer to soil classes that can be predicted far away from the actual sampling locations as homosoils. The homosoils concept is based on the assumption that locations that share similar environments (e.g. soil-forming factors) are likely to exhibit similar soils and soil properties also.

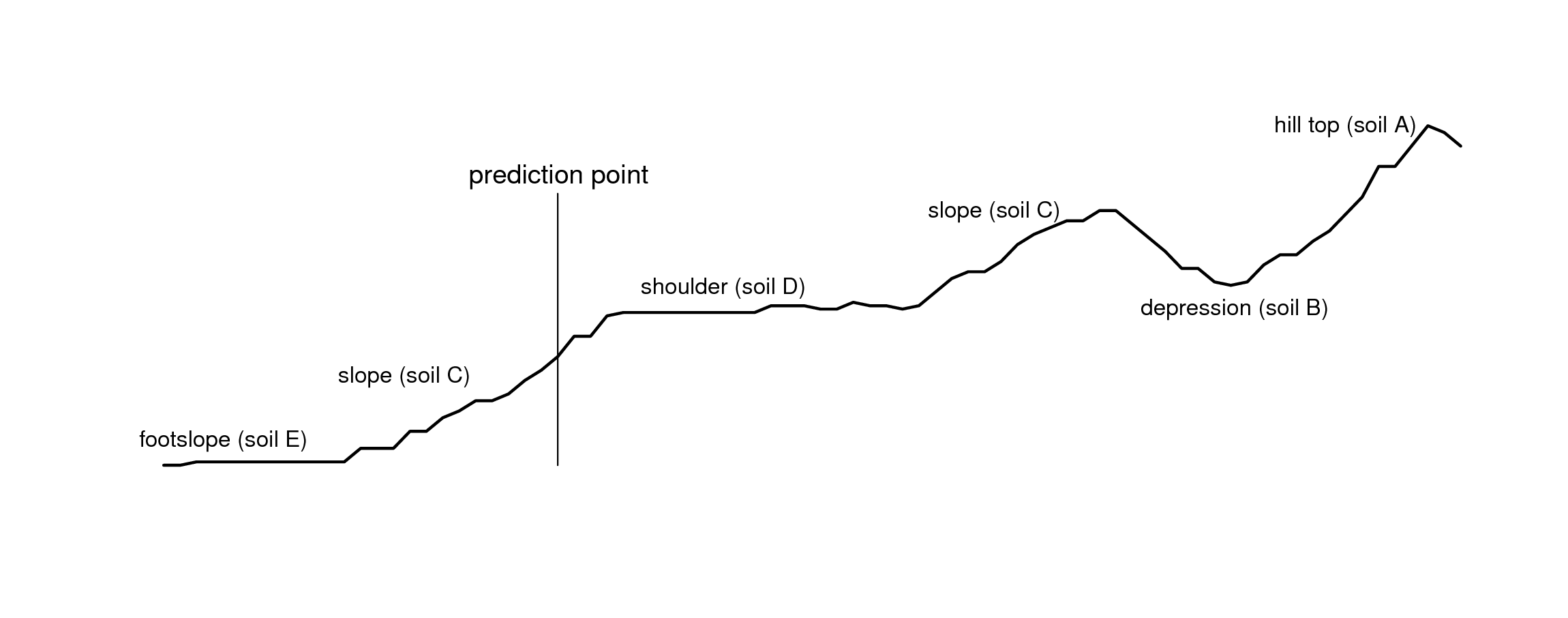

Figure 5.5: Landform positions and location of a prediction point for the Maungawhau data set.

Expert-based systems also rely on using standard mapping paradigms such as the concept of relating soil series occurrance to landscape position along a toposequence, or catena . Fig. 5.5, for example, shows a cross-section derived using the elevation data in Fig. 5.6. An experienced soil surveyor would visit the area and attempt to produce a diagram showing a sequence of soil types positioned along this cross-section. This expert knowledge can be subsequently utilized as manual mapping rules, provided that it is representative of the area, that it can be formalized through repeatable procedures and that it can be tested using real observations.

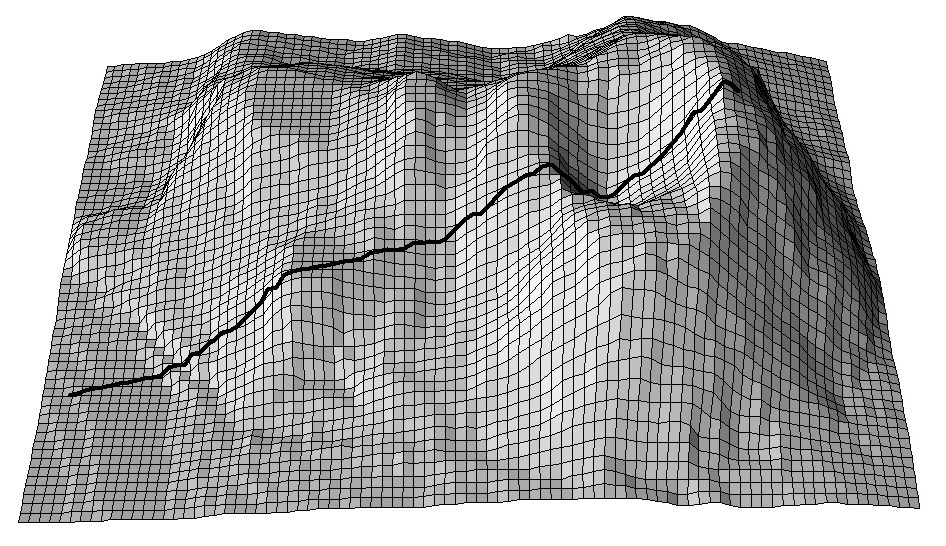

Figure 5.6: A cross-section for the Maungawhau volcano dataset commonly used in R to illustrate DEM and image analysis techniques.

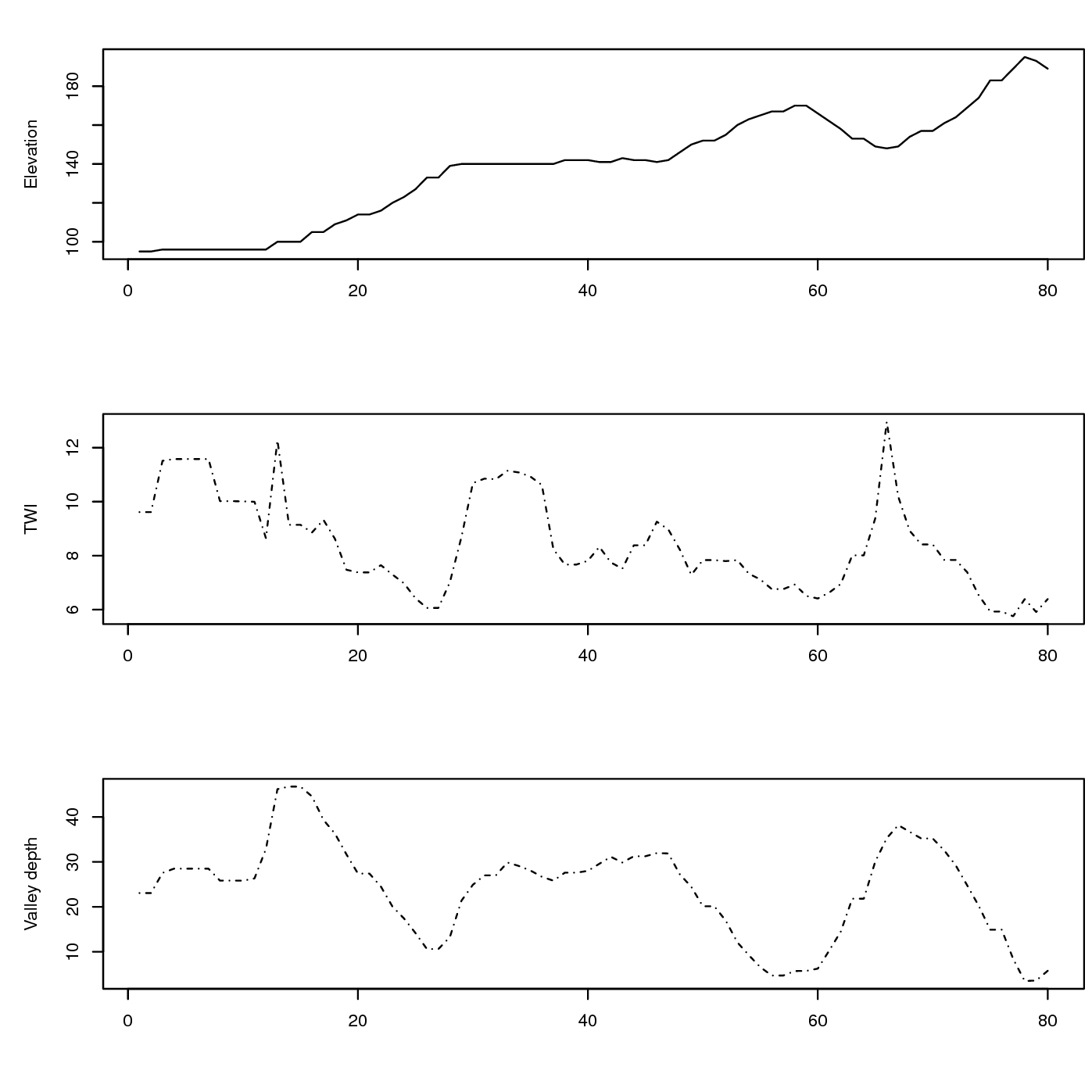

If relevant auxiliary information, such as a Digital Elevation Model (DEM), is available for the study area, one can derive a number of DEM parameters that can help to quantify landforms and geomorphological processes. Landforms can also automatically be classified by computing various DEM parameters per pixel, or by using knowledge from, Fig. 5.7 (a sample of the study area) to objectively extract landforms and associated soils in an area. Such auxiliary landform information can be informative about the spatial distribution of the soil, which is the key principle of, for example, the SOTER methodology (Van Engelen and Dijkshoorn 2012).

The mapping process of knowledge-driven soil mapping can be summarized as follows (MacMillan, Pettapiece, and Brierley 2005; MacMillan et al. 2010):

Sample the study area using transects oriented along topographic cross-sections;

Assign soil types to each landform position and at each sample location;

Derive DEM parameters and other auxiliary data sets;

Develop (fuzzy) rules relating the distribution of soil classes to the auxiliary (mainly topographic) variables;

Implement (fuzzy) rules to allocate soil classes (or compute class probabi;ities) for each grid location;

Generate soil property values for each soil class using representative observations (class centers);

Estimate values of the target soil variable at each grid location using a weighted average of allocated soil class or membership values and central soil property values for each soil class;

Figure 5.7: Associated values of DEM-based covariates: TWI — Topographic Wetness Index and Valley depth for the cross-section from the previous figure.

In mathematical terms, soil property prediction based on fuzzy soil classification values using the SOLIM approach Zhu et al. (2001, 2010) works as follows:

\[\begin{equation} \begin{aligned} \hat z({s}_0) = \sum\limits_{c_j = 1}^{c_p} {\nu _{c_j} ({s}_0) \cdot z_{c_j} }; & \hspace{.6cm} \sum\limits_{c_j = 1}^{c_p} {\nu _j ({s}_0)} = 1\end{aligned} \tag{5.9} \end{equation}\]where \(\hat z({s}_0)\) is the predicted soil attribute at

\({s}_0\), \(\nu _{c_j} ({s}_0)\) is the membership value of class

\(c_j\) at location \({s}_0\), and \(z_{c_j}\) is the modal (or best

representative) value of the inferred soil attribute of the \(c_j\)-th

category. The predicted soil attribute is mapped directly from

membership maps using a linear additive weighing function. Consider the

example of six soil classes A, B, C, D, E and F. The

attribute table indicates that soil type A has 10%, B 10%, C 30%,

D 40%, E 25%, and F 35% of clay. If the membership values at a

grid position are 0.6, 0.2, 0.1, 0.05, 0.00 and 0.00, then

Eq.(5.9) predicts the clay content as 13.5%.

It is obvious from this work flow that the critical aspects that determine the accuracy of the final predictions are the selection of where we locate the cross-sections and the representative soil profiles and the strength of the relationship between the resulting soil classes and target soil properties. Qi et al. (2006), for example, recommended that the most representative values for soil classes can be identified, if many soil profiles are available, by finding the sampling location that occurs at the grid cell with highest similarity value for a particular soil class. Soil mappers are now increasingly looking for ways to combine expert systems with statistical data mining and regression modelling techniques.

One problem of using a supervised mapping system, as described above, is that it is difficult to get an objective estimate of the prediction error (or at least a robust statistical theory for this has not yet been developed). The only possibility to assess the accuracy of such maps would be to collect independent validation samples and estimate the mapping accuracy following the methods described in section 5.3. So, in fact, expert-based systems also depend on statistical sampling and inference for evaluation of the accuracy of the resulting map.

5.2.4 Geostatistics-driven soil mapping (pedometric mapping)

Pedometric mapping is based on using statistical models to predict soil properties, which leads us to the field of geostatistics. Geostatistics treats the soil as a realization of a random process (Webster and Oliver 2001). It uses the point observations and gridded covariates to predict the random process at unobserved locations, which yields conditional probability distributions, whose spread (i.e. standard deviation, width of prediction intervals) explicitly characterizes the uncertainty associated with the predictions. As mentioned previously in section 1.3.6, geostatistics is a data-driven approach to soil mapping in which georeferenced point samples are the key input to map production.

Traditional geostatistics has basically been identified with various ways of variogram modeling and kriging (Haining, Kerry, and Oliver 2010). Contemporary geostatistics extends linear models and plain kriging techniques to non-linear and hybrid models; it also extends purely spatial models (2D) to 3D and space-time models (Schabenberger and Gotway 2005; Bivand, Pebesma, and Rubio 2008; Diggle and Ribeiro Jr 2007; Cressie and Wikle 2011). Implementation of more sophisticated geostatistical models for soil mapping is an ongoing activity and is quite challenging (computationally), especially in the case of fine-resolution mapping of large areas (Hengl, Mendes de Jesus, et al. 2017).

Note also that geostatistical mapping is often restricted to quantitative soil properties. Soil prediction models that predict categorical soil variables such as soil type or soil colour class are often quite complex (see e.g. Hengl, Toomanian, et al. (2007) and Kempen et al. (2009) for a discussion). Most large scale soil mapping projects also require predictions in 3D, or at least 2D predictions (layers) for several depth intervals. This can be done by treating each layer separately in a 2D analysis, possibly by taking vertical correlations into account, but also by direct 3D geostatistical modelling. Both approaches are reviewed in the following sections.

Over the last decade statisticians have recommended using model-based geostatistics as the most reliable framework for spatial predictions. The essence of model-based statistics is that “the statistical methods are derived by applying general principles of statistical inference based on an explicitly declared stochastic model of the data generating mechanism” (Diggle and Ribeiro Jr 2007; P. E. Brown 2015). This avoids ad hoc, heuristic solution methods and has the advantage that it yields generic and portable solutions. Some examples of diverse geostatistical models are given in P. E. Brown (2015).

The basic geostatistical model treats the soil property of interest as the sum of a deterministic trend and a stochastic residual:

\[\begin{equation} Z({s}) = m({s}) + \varepsilon({s}) \tag{5.10} \end{equation}\]where \(\varepsilon\) and hence \(Z\) are normally distributed stochastic processes. This is the same model as that presented in Eq.(5.1), with in this case \(\varepsilon = \varepsilon ' + \varepsilon ''\) being the sum of the spatially correlated and spatially uncorrelated stochastic components. The mean of \(\varepsilon\) is taken to be zero. Note that we use capital letter \(Z\) because we use a probabilistic model, i.e. we treat the soil property as an outcome of a stochastic process and define a model of that stochastic process. Ideally, the spatial variation of the stochastic residual of Eq.(5.10) is much less than that of the dependent variable.

When the assumption of normality is not realistic, such as when the frequency distribution of the residuals at observation locations is very skewed, the easiest solution is to take a Transformed Gaussian approach (Diggle and Ribeiro Jr 2007 ch3.8) in which the Gaussian geostatistical model is formulated for a transformation of the dependent variable (e.g. logarithmic, logit, square root, Box-Cox transform). A more advanced approach would drop the normal distribution approach entirely and assume a Generalized Linear Geostatistical Model (Diggle and Ribeiro Jr 2007; P. E. Brown 2015) but this complicates the statistical analysis and prediction process dramatically. The Transformed Gaussian approach is nearly as simple as the Gaussian approach although the back-transformation requires attention, especially when the spatial prediction includes a change of support (leading to block kriging). If this is the case, it may be necessary to use a stochastic simulation approach and derive the predictions and associated uncertainty (i.e. the conditional probability distribution) using numerical simulations.

The trend part of Eq.(5.10) (i.e. \(m\)) can take many forms. In the simplest case it would be a constant but usually it is taken as some function of known, exhaustively available covariates. This is where soil mapping can benefit from other sources of information and can implement Jenny’s State Factor Model of soil formation (Jenny, Salem, and Wallis 1968; Jenny 1994; Heuvelink and Webster 2001; McBratney et al. 2011), which has been known from the time of Dokuchaev (Florinsky 2012). The covariates are often maps of environmental properties that are known to be related to the soil property of interest (e.g. elevation, land cover, geology) but could also be the outcome of a mechanistic soil process model (such as a soil acidification model, a soil nutrient leaching model or a soil genesis model). In the case of the latter one might opt for taking \(m\) equal to the output of the deterministic model, but when the covariates are related environmental properties one must define a structure for \(m\) and introduce parameters to be estimated from paired observations of the soil property and covariates. One of the simplest approaches is to use multiple linear regression to predict values at some new location \({s}_0\) (Kutner et al. 2005):

\[\begin{equation} m({s}_0 ) = \sum\limits_{j = 0}^p { \beta _j \cdot X_j ({s}_0 )} \tag{5.11} \end{equation}\]where \(\beta _j\) are the regression model coefficients, \(\beta _0\) is the intercept, \(j=1,\ldots,p\) are covariates or explanatory variables (available at all locations within the study area of interest \(\mathbb{A}\)), and \(p\) is the number of covariates. Eq.(5.11) can also include categorical covariates (e.g. maps of land cover, geology, soil type) by representing these by as many binary dummy variables as there are categories (minus one, to be precise, since an intercept is included in the model). In addition, transformed covariates may also be included or interactions between covariates. The latter is achieved by extending the set of covariates with products or other mixtures of covariates. However, note that this will dramatically increase the number of covariates. The risk of considering a large number of covariates is that it may become difficult to obtain reliable estimates of the regression coefficients. Also one may run the risk of multicollinearity — the property of covariates being mutually strongly correlated (as indicated by Jenny, Salem, and Wallis (1968) already in (1968)).

The advantage of Eq.(5.11) is that it is linear in the unknown coefficients, which makes their estimation relatively straightforward and also permits derivation of the uncertainty about the regression coefficients (\(\beta\)). However, in many practical cases, the linear formulation may be too restrictive and that is why alternative structures have been extensively developed to establish the relationship between the dependent and covariates. Examples of these so-called ‘statistical learning’ and/or ‘machine learning’ approaches are:

artificial neural networks (Yegnanarayana 2004),

classification and regression trees (Breiman 1993),

support vector machines (Hearst et al. 1998),

computer-based expert systems,

Statistical treatment of many of these methods is given in Hastie, Tibshirani, and Friedman (2009) and Kuhn and Johnson (2013). Care needs to be taken when using machine learning techniques, such as random forest, because such techniques are more sensitive to noise and blunders in the data.

Most methods listed above require appropriate levels of expertise to avoid pitfalls and incorrect use but, when feasible and used properly, these methods should extract maximal information about the target variable from the covariates (Statnikov, Wang, and Aliferis 2008; Kanevski, Timonin, and Pozdnukhov 2009).

The trend (\(m\)) relates covariates to soil properties and for this it uses a soil-environment correlation model — the so-called CLORPT model, which was formulated by Jenny in 1941 (a (1994) reprint from that book is also available). McBratney, Mendonça Santos, and Minasny (2003) further formulated an extension of the CLORPT model known as the “SCORPAN” model.

The CLORPT model may be written as (Jenny 1994; Florinsky 2012):

\[\begin{equation} S = f (cl, o, r, p, t) \tag{5.12} \end{equation}\]where \(S\) stands for soil (properties and classes), \(cl\) for climate, \(o\) for organisms (including humans), \(r\) is relief, \(p\) is parent material or geology and \(t\) is time. In other words, we can assume that the distribution of both soil and vegetation (at least in a natural system) can be at least partially explained by environmental conditions. Eq.(5.12) suggests that soil is a result of environmental factors, while in reality there are many feedbacks and soil, in turn, influences many of the factors on the right-hand side of Eq.(5.12), such as \(cl\), \(o\) and \(r\).

Uncertainty about the estimation errors of model coefficients can fairly easily be taken into account in the subsequent prediction analysis if the model is linear in the coefficients, such as in Eq.(5.11). In this book we therefore restrict ourselves to this case but allow that the \(X_j\)’s in Eq.(5.11) are derived in various ways.

Since the stochastic residual of Eq.(5.10) is normally distributed and has zero mean, only its variance-covariance remains to be specified:

\[\begin{equation} C\left[Z({s}),Z({s}+{h})\right] = \sigma (s) \cdot \sigma(s+{h}) \cdot \rho ({h}) \end{equation}\]where \({{h}}\) is the separation distance between two locations. Note that here we assumed that the correlation function \(\rho\) is invariant to geographic translation (i.e., it only depends on the distance \({h}\) between locations and not on the locations themselves). If in addition the standard deviation \(\sigma\) would be spatially invariant then \(C\) would be second-order stationary. These type of simplifying assumptions are needed to be able to estimate the variance-covariance structure of \(C\) from the observations. If the standard deviation is allowed to vary with location, then it could be defined in a similar way as in Eq.(5.11). The correlation function \(\rho\) would be parameterised to a common form (e.g. exponential, spherical, Matérn), thus ensuring that the model is statistically valid and positive-definite. It is also quite common to assume isotropy, meaning that two-dimensional geographic distance \({{h}}\) can be reduced to one-dimensional Euclidean distance \(h\).

Once the model has been defined, its parameters must be estimated from the data. These are the regression coefficients of the trend (when applicable) and the parameters of the variance-covariance structure of the stochastic residual. Commonly used estimation methods are least squares and maximum likelihood. Both methods have been extensively described in the literature (e.g. Webster and Oliver (2001) and Diggle and Ribeiro Jr (2007)). More complex trend models may also use the same techniques to estimate their parameters, although they might also need to rely on more complex parameter estimation methods such as genetic algorithms and simulated annealing (Lark and Papritz 2003).

The optimal spatial prediction in the case of a model Eq.(5.10) with a linear trend Eq.(5.11) and a normally distributed residual is given by the well-kown Best Linear Unbiased Predictor (BLUP):

\[\begin{equation} \hat z({{{s}}_0}) = {{X}}_{{0}}^{{T}}\cdot \hat{{\beta}} + \hat{{\lambda}}_{{0}}^{{T}}\cdot({{z}} - {{X}}\cdot \hat{{\beta}} ) \tag{5.13} \end{equation}\]where the regression coefficients and kriging weights are estimated using:

\[\begin{equation} \begin{aligned} \hat{{\beta}} &= {\left( {{{{X}}^{{T}}}\cdot{{{C}}^{ - {{1}}}}\cdot{{X}}} \right)^{ - {{1}}}}\cdot{{{X}}^{{T}}}\cdot{{{C}}^{ - {{1}}}}\cdot{{z}} \\ \hat{{\lambda}}_{{0}} &= {C}^{ - {{1}}} \cdot {{c}}_{{0}} \notag\end{aligned} \tag{5.14} \end{equation}\]and where \({{X}}\) is the matrix of \(p\) predictors at the \(n\) sampling locations, \(\hat{{\beta}}\) is the vector of estimated regression coefficients, \({C}\) is the \(n\)\(n\) variance-covariance matrix of residuals, \({c}_{{0}}\) is the vector of \(n\)\(1\) covariances at the prediction location, and \({\lambda}_{{0}}\) is the vector of \(n\) kriging weights used to interpolate the residuals. Derivation of BLUP for spatial data can be found in many standard statistical books e.g. Stein (1999), Christensen (2001, 277), Venables and Ripley (2002, 425–30) and/or Schabenberger and Gotway (2005).

Any form of kriging computes the conditional distribution of \(Z({{s}}_0)\) at an unobserved location \({{s}}_0\) from the observations \(z({{s}}_1 )\), \(z({{s}}_2 ), \ldots , z({{s}}_n )\) and the covariates \({{X}}({{s}}_0)\) (matrix of size \(p \times n\)). From a statistical perspective this is straightforward for the case of a linear model and normally distributed residuals. However, solving large matrices and more sophisticated model fitting algorithms such as restricted maximum likelihood can take a significant amount of time if the number of observations is large and/or the prediction grid dense. Pragmatic approaches to addressing constraints imposed by large data sets are to constrain the observation data set to local neighbourhoods or to take a multiscale nested approach.

Kriging not only yields optimal predictions but also quantifies the prediction error with the kriging standard deviation. Prediction intervals can be computed easily because the prediction errors are normally distributed. Alternatively, uncertainty in spatial predictions can also be quantified with spatial stochastic simulation. While kriging yields the ‘optimal’ prediction of the soil property at any one location, spatial stochastic simulation yields a series of possible values by sampling from the conditional probability distribution. In this way a large number of ‘realizations’ can be generated, which can be useful when the resulting map needs to be back-transformed or when it is used in a spatial uncertainty propagation analysis. Spatial stochastic simulation of the linear Gaussian model can be done using a technique known as sequential Gaussian simulation (Goovaerts 1997; Yamamoto 2008). It is not, in principal, more difficult than kriging but it is certainly numerically more demanding i.e. takes significantly more time to compute.

5.2.5 Regression-kriging (generic model)

Ignoring the assumptions about the cross-correlation between the trend and residual components, we can extend the regression-kriging model and use any type of (non-linear) regression to predict values ( e.g. regression trees, artificial neural networks and other machine learning models), calculate residuals at observation locations, fit a variogram for these residuals, interpolate the residuals using ordinary or simple kriging, and add the result to the predicted regression part. This means that RK can, in general, be formulated as:

\[\begin{equation} {\rm prediction} \; = \; \begin{matrix} {\rm trend} \; {\rm predicted} \\ {\rm using} \; {\rm regression} \end{matrix} \; + \; \begin{matrix} {\rm residual} \; {\rm predicted} \\ {\rm using} \; {\rm kriging} \end{matrix} \tag{5.15} \end{equation}\]Again, statistical inference and prediction is relatively simple if the stochastic residual, or a transformation thereof, may be assumed normally distributed. Error of the regression-kriging model is likewise a sum of the regression and the kriging model errors.

5.2.6 Spatial Prediction using multiple linear regression

The predictor \(\hat Y({{ s}_0})\) of \(Y({{ s}_0})\) is typically taken as a function of covariates and the \(Y({ s}_i)\) which, upon substitution of the observations \(y({ s}_i)\), yields a (deterministic) prediction \(\hat y({{ s}_0})\). In the case of multiple linear regression (MLR), model assumptions state that at any location in \(D\) the dependent variable is the sum of a linear combination of the covariates at that location and a zero-mean normally distributed residual. Thus, at the \(n\) observation locations we have:

\[\begin{equation} { Y} = { X}^{{ T}} \cdot { \beta} + { \varepsilon} \tag{5.16} \end{equation}\]where \({ Y}\) is a vector of the target variable at the \(n\) observation locations, \({ X}\) is an \(n \times p\) matrix of covariates at the same locations and \({ \beta}\) is a vector of \(p\) regression coefficients. The stochastic residual \({ \varepsilon}\) is assumed to be independently and identically distributed. The paired observations of the target variable and covariates (\({ y}\) and \({ X}\)) are used to estimate the regression coefficients using, e.g., Ordinary Least Squares (Kutner et al. 2004):

\[\begin{equation} \hat{{ \beta}} = \left( {{{ X}}^{{ T}} \cdot {{ X}}} \right)^{ - {{ 1}}} \cdot {{ X}}^{{ T}} \cdot {{ y}} \tag{5.17} \end{equation}\]once the coefficients are estimated, these can be used to generate a prediction at \({ s}_0\):

\[\begin{equation} \hat y({ s}_0) = { x}_0^{ T} \cdot { \hat \beta} \end{equation}\]with associated prediction error variance:

\[\begin{equation} \sigma ^2 ({ s}_0 ) = var\left[ \varepsilon ({ s}_0) \right] \cdot \left[ {1 + {\mathbf x}_0^{\rm T} \cdot \left( {{\mathbf X}^{\rm T} \cdot {\mathbf X}} \right)^{ - {\mathbf 1}} \cdot {\mathbf x}_0 } \right] \tag{5.18} \end{equation}\]here, \({\mathbf x}_0\) is a vector with covariates at the prediction location and \(var\left[ \varepsilon ({ s}_0) \right]\) is the variance of the stochastic residual. The latter is usually estimated by the mean squared error (MSE):

\[\begin{equation} {\mathrm{MSE}} = \frac{\sum\limits_{i = 1}^n {(y_i - \hat y_i)^2}}{n-p} \end{equation}\]The prediction error variance given by Eq.(5.18) is smallest at prediction points where the covariate values are in the center of the covariate (‘feature’) space and increases as predictions are made further away from the center. They are particularly large in case of extrapolation in feature space (Kutner et al. 2004). Note that the model defined in Eq.(5.16) is a non-spatial model because the observation locations and spatial-autocorrelation of the dependent variable are not taken into account.

5.2.7 Universal kriging prediction error

In the case of universal kriging, regression-kriging or Kriging with External Drift, the prediction error is computed as (Christensen 2001):

\[\begin{equation} \hat \sigma _{\tt{UK}}^2 ({{s}}_0 ) = (C_0 + C_1 ) - {{c}}_{{0}}^{{T}} \cdot {{C}}^{ - {{1}}} \cdot {{c}}_{{0}} + \theta_0 \tag{5.19} \end{equation}\] \[\begin{equation} \theta_0 = \left( {{{X}}_{{0}} - {{X}}^{{T}} \cdot {{C}}^{ - {{1}}} \cdot {{c}}_{{0}} } \right)^{{T}} \cdot \left( {{{X}}^{{T}} \cdot {{C}}^{ - {{1}}} \cdot {{X}}} \right)^{{{ - 1}}} \cdot \left( {{{X}}_{{0}} - {{X}}^{{T}} \cdot {{C}}^{ - {{1}}} \cdot {{c}}_{{0}} } \right) \tag{5.20} \end{equation}\]where \(C_0 + C_1\) is the sill variation (variogram parameters), \({C}\) is the covariance matrix of the residuals, and \({{c}}_0\) is the vector of covariances of residuals at the unvisited location.

Ignoring the mixed component of the prediction variance in Eq.(5.20), one can also derive a simplified regression-kriging variance i.e. as a sum of the kriging variance and the standard error of estimating the regression mean:

\[\begin{equation} \hat \sigma _{\tt{RK}}^2 ({{s}}_0) = (C_0 + C_1 ) - {{c}}_{{0}}^{{T}} \cdot {{C}}^{ - {{1}}} \cdot {{c}}_{{0}} + {\it{SEM}}^2 \tag{5.21} \end{equation}\]Note that there will always be a small difference between results of Eq.(5.19) and Eq.(5.21), and this is a major disadvantage of using the general regression-kriging framework for spatial prediction. Although the predicted mean derived by using regression-kriging or universal kriging approaches might not differ, the estimate of the prediction variance using Eq.(5.21) will be suboptimal as it ignores product component. On the other hand, the advantage of running separate regression and kriging predictions is often worth the sacrifice as the computing time is an order of magnitude shorter and we have more flexibility to combine different types of regression models with kriging when regression is run separately from kriging (Hengl, Heuvelink, and Rossiter 2007).

5.2.8 Regression-kriging examples

The type of regression-kriging model explained in the previous section can be implemented here by combining the regression and geostatistics packages. Consider for example the Meuse case study:

library(gstat)

demo(meuse, echo=FALSE)We can overlay the points and grids to create the regression matrix by:

meuse.ov <- over(meuse, meuse.grid)

meuse.ov <- cbind(as.data.frame(meuse), meuse.ov)

head(meuse.ov[,c("x","y","dist","soil","om")])

#> x y dist soil om

#> 1 181072 333611 0.0014 1 13.6

#> 2 181025 333558 0.0122 1 14.0

#> 3 181165 333537 0.1030 1 13.0

#> 4 181298 333484 0.1901 2 8.0

#> 5 181307 333330 0.2771 2 8.7

#> 6 181390 333260 0.3641 2 7.8which lets us fit a linear model for organic carbon as a function of distance to river and soil type:

m <- lm(log1p(om)~dist+soil, meuse.ov)

summary(m)

#>

#> Call:

#> lm(formula = log1p(om) ~ dist + soil, data = meuse.ov)

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -1.0831 -0.1504 0.0104 0.2098 0.5913

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) 2.3421 0.0425 55.05 < 2e-16 ***

#> dist -0.8009 0.1787 -4.48 0.0000147 ***

#> soil2 -0.3358 0.0702 -4.78 0.0000041 ***

#> soil3 0.0366 0.1247 0.29 0.77

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 0.33 on 149 degrees of freedom

#> (2 observations deleted due to missingness)

#> Multiple R-squared: 0.384, Adjusted R-squared: 0.371

#> F-statistic: 30.9 on 3 and 149 DF, p-value: 1.32e-15Next, we can derive the regression residuals and fit a variogram:

meuse.s <- meuse[-m$na.action,]

meuse.s$om.res <- resid(m)

vr.fit <- fit.variogram(variogram(om.res~1, meuse.s), vgm(1, "Exp", 300, 1))

vr.fit

#> model psill range

#> 1 Nug 0.048 0

#> 2 Exp 0.065 285With this, all model parameters (four regression coefficients and three variogram parameters) for regression-kriging have been estimated and the model can be used to generate predictions. Note that the regression model we fitted is significant, and the remaining residuals still show spatial auto-correlation. The nugget variation is about 1/3rd of the sill variation.

Using the gstat package (Pebesma 2004; Bivand, Pebesma, and Rubio 2013), regression and kriging can be combined by running universal kriging or kriging with external drift (Hengl, Heuvelink, and Rossiter 2007). First, the variogram of the residuals is calculated:

v.s <- variogram(log1p(om)~dist+soil, meuse.s)

vr.fit <- fit.variogram(v.s, vgm(1, "Exp", 300, 1))

vr.fit

#> model psill range

#> 1 Nug 0.048 0

#> 2 Exp 0.065 285which gives almost the same model parameter values as the

regression-kriging above. Next, the kriging can be executed with a

single call to the generic krige function:

om.rk <- krige(log1p(om)~dist+soil, meuse.s, meuse.grid, vr.fit)

#> [using universal kriging]The package nlme fits the regression model and the variogram of the residuals concurrently (Pinheiro and Bates 2009):

library(nlme)

m.gls <- gls(log1p(om)~dist+soil, meuse.s, correlation=corExp(nugget=TRUE))

m.gls

#> Generalized least squares fit by REML

#> Model: log1p(om) ~ dist + soil

#> Data: meuse.s

#> Log-restricted-likelihood: -26

#>

#> Coefficients:

#> (Intercept) dist soil2 soil3

#> 2.281 -0.623 -0.244 -0.057

#>

#> Correlation Structure: Exponential spatial correlation

#> Formula: ~1

#> Parameter estimate(s):

#> range nugget

#> 2.00 0.07

#> Degrees of freedom: 153 total; 149 residual

#> Residual standard error: 0.34In this case, the regression coefficients have been estimated using Eq.(5.14) i.e. via Restricted maximum likelihood (REML). The advantage of fitting the regression model and spatial autocorrelation structure concurrently is that both fits are adjusted: the estimation of the regression coefficients is adjusted for spatial autocorrelation of the residual and the variogram parameters are adjusted for the adjusted trend estimate. A disadvantage of using the nlme package is that the computational intensity increases with the size of the data set, so for any data set >1000 points the computation time can increase to tens of hours of computing. On the other hand, coefficients fitted by REML methods might not result in significantly better predictions. Getting the most objective estimate of the model parameters is sometimes not worth the effort, as demonstrated by Minasny and McBratney (2007).

Simultaneous estimation of regression coefficients and variogram parameters and including estimation errors in regression coefficients into account by using universal kriging / kriging with external drift is more elegant from a statistical point of view, but there are computational and other challenges. One of these is that it is difficult to implement global estimation of regression coefficients with local spatial prediction of residuals, which is a requirement in the case of large spatial data sets. Also, the approach does not extend to more complex non-linear trend models. In such cases, we recommend separating trend estimation from kriging of residuals by using the regression-kriging approach discussed above (Eq.(5.15)).

5.2.9 Regression-kriging examples using the GSIF package

In the GSIF package, most of the steps described above (regression modelling and variogram modelling) used to fit regression-kriging models are wrapped into generic functions. A regression-kriging model can be fitted in one step by running:

omm <- fit.gstatModel(meuse, log1p(om)~dist+soil, meuse.grid)

#> Fitting a linear model...

#> Fitting a 2D variogram...

#> Saving an object of class 'gstatModel'...

str(omm, max.level = 2)

#> Formal class 'gstatModel' [package "GSIF"] with 4 slots

#> ..@ regModel :List of 32

#> .. ..- attr(*, "class")= chr [1:2] "glm" "lm"

#> ..@ vgmModel :'data.frame': 2 obs. of 9 variables:

#> .. ..- attr(*, "singular")= logi FALSE

#> .. ..- attr(*, "SSErr")= num 0.00000107

#> .. ..- attr(*, "call")= language gstat::fit.variogram(object = svgm, model = ivgm)

#> ..@ svgmModel:'data.frame': 15 obs. of 6 variables:

#> .. ..- attr(*, "direct")='data.frame': 1 obs. of 2 variables:

#> .. ..- attr(*, "boundaries")= num [1:16] 0 106 213 319 426 ...

#> .. ..- attr(*, "pseudo")= num 0

#> .. ..- attr(*, "what")= chr "semivariance"

#> ..@ sp :Formal class 'SpatialPointsDataFrame' [package "sp"] with 5 slotsthe resulting gstatModel class object consists of a (1) regression

component, (2) variogram model for residual, and (3) sample variogram

for plotting, (4) spatial locations of observations used to fit the model. To predict values of organic carbon using this model, we can run:

om.rk <- predict(omm, meuse.grid)

#> Subsetting observations to fit the prediction domain in 2D...

#> Generating predictions using the trend model (RK method)...

#> [using ordinary kriging]

#>

100% done

#> Running 5-fold cross validation using 'krige.cv'...

#> Creating an object of class "SpatialPredictions"

om.rk

#> Variable : om

#> Minium value : 1

#> Maximum value : 17

#> Size : 153

#> Total area : 4964800

#> Total area (units) : square-m

#> Resolution (x) : 40

#> Resolution (y) : 40

#> Resolution (units) : m

#> GLM call formula : log1p(om) ~ dist + soil

#> Family : gaussian

#> Link function : identity

#> Vgm model : Exp

#> Nugget (residual) : 0.048

#> Sill (residual) : 0.065

#> Range (residual) : 285

#> RMSE (validation) : 2.4

#> Var explained : 49.4%

#> Effective bytes : 295

#> Compression method : gzip

## back-transformation:

meuse.grid$om.rk <- expm1(om.rk@predicted$om + om.rk@predicted$var1.var/2)

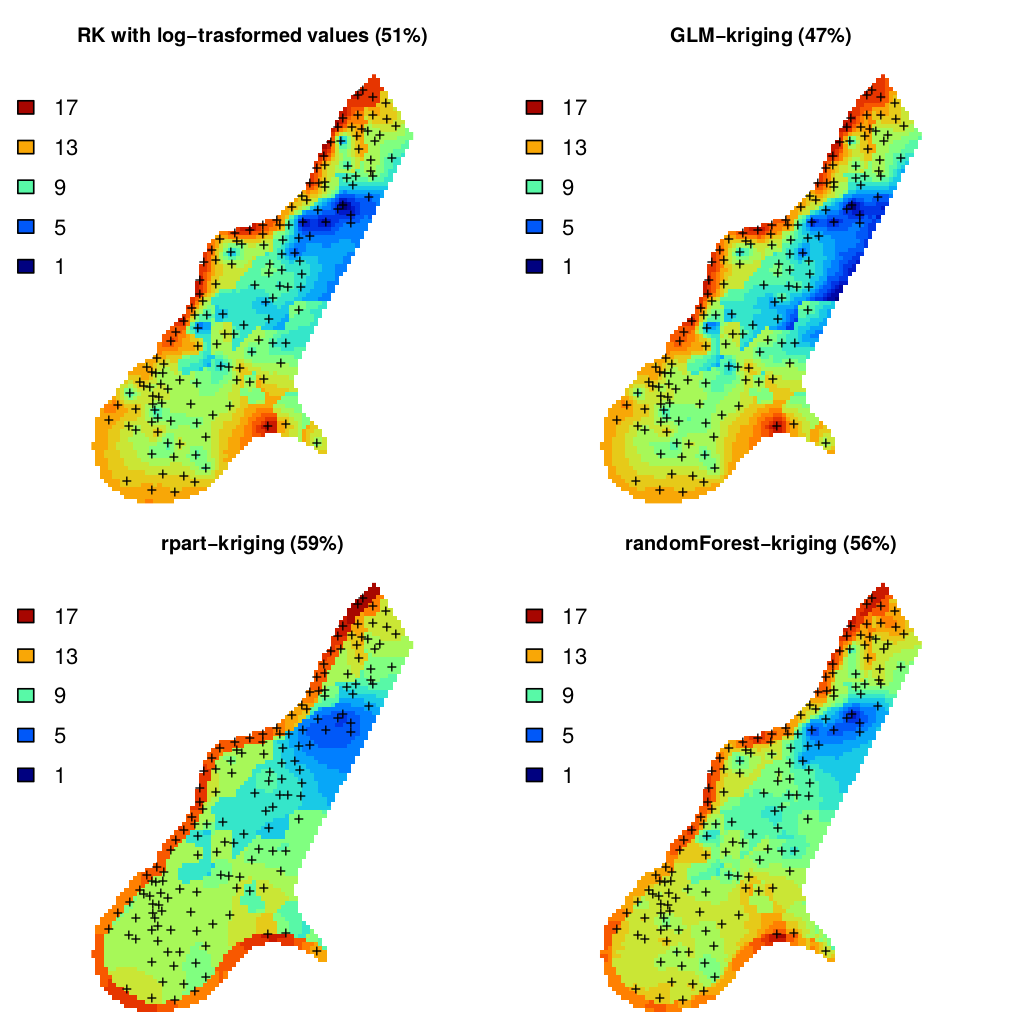

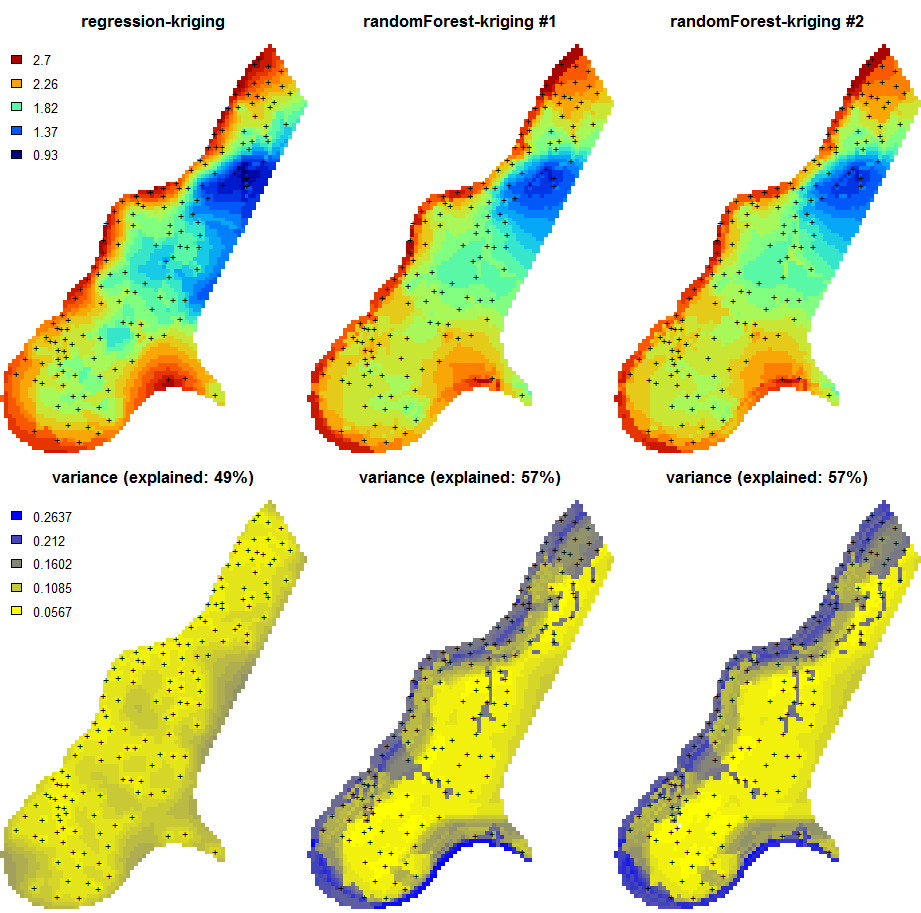

Figure 5.8: Predictions of organic carbon in percent (top soil) for the Meuse data set derived using regression-kriging with transformed values, GLM-kriging, regression tress (rpart) and random forest models combined with kriging. The percentages in brackets indicates amount of variation explained by the models.

We could also have opted for fitting a GLM with a link function, which would look like this:

omm2 <- fit.gstatModel(meuse, om~dist+soil, meuse.grid, family=gaussian(link=log))

#> Fitting a linear model...

#> Fitting a 2D variogram...

#> Saving an object of class 'gstatModel'...

summary(omm2@regModel)

#>

#> Call:

#> glm(formula = om ~ dist + soil, family = fit.family, data = rmatrix)

#>

#> Deviance Residuals:

#> Min 1Q Median 3Q Max

#> -7.066 -1.492 -0.281 1.635 7.401

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) 10.054 0.348 28.88 < 2e-16 ***

#> dist -8.465 1.461 -5.79 4e-08 ***

#> soil2 -2.079 0.575 -3.62 0.00041 ***

#> soil3 0.708 1.021 0.69 0.48913

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> (Dispersion parameter for gaussian family taken to be 7.2)

#>

#> Null deviance: 1791.4 on 152 degrees of freedom

#> Residual deviance: 1075.5 on 149 degrees of freedom

#> (2 observations deleted due to missingness)

#> AIC: 742.6

#>

#> Number of Fisher Scoring iterations: 2

om.rk2 <- predict(omm2, meuse.grid)

#> Subsetting observations to fit the prediction domain in 2D...

#> Generating predictions using the trend model (RK method)...

#> [using ordinary kriging]

#>

100% done

#> Running 5-fold cross validation using 'krige.cv'...

#> Creating an object of class "SpatialPredictions"or fitting a regression tree:

omm3 <- fit.gstatModel(meuse, log1p(om)~dist+soil, meuse.grid, method="rpart")

#> Fitting a regression tree model...

#> Estimated Complexity Parameter (for prunning): 0.09396

#> Fitting a 2D variogram...

#> Saving an object of class 'gstatModel'...or a random forest model:

omm4 <- fit.gstatModel(meuse, om~dist+soil, meuse.grid, method="quantregForest")

#> Fitting a Quantile Regression Forest model...

#> Fitting a 2D variogram...

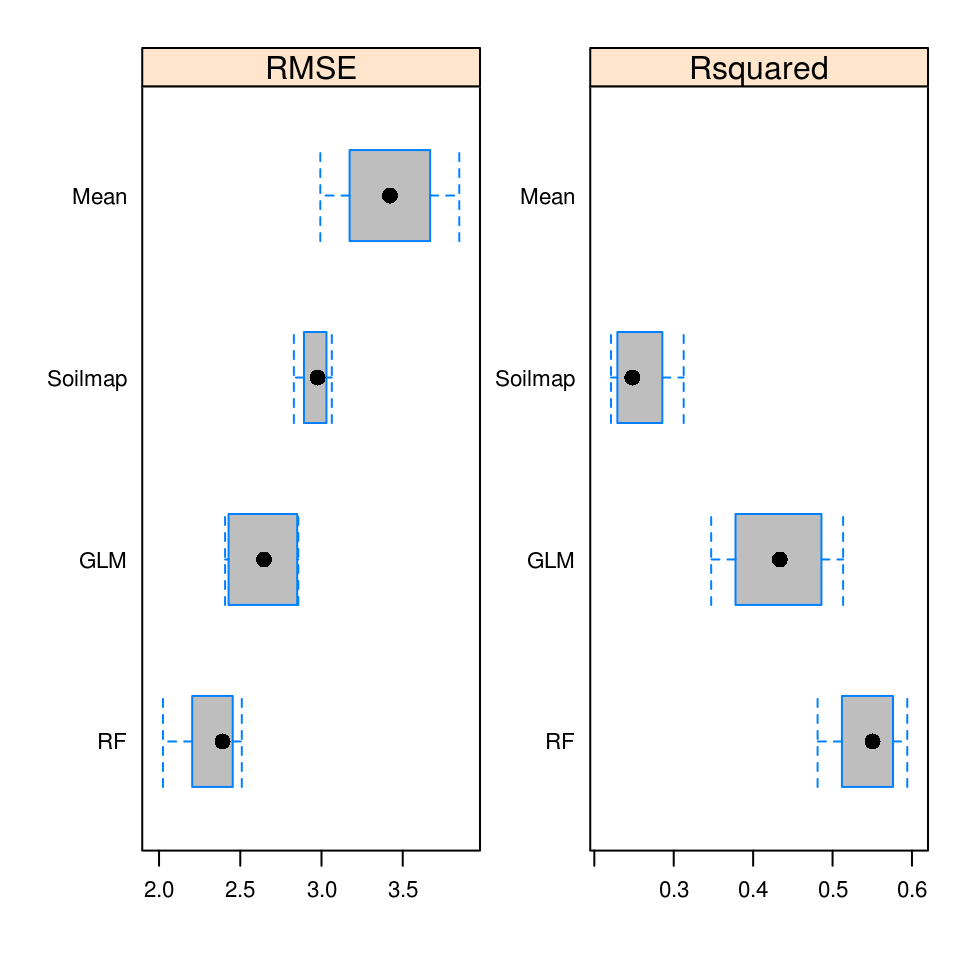

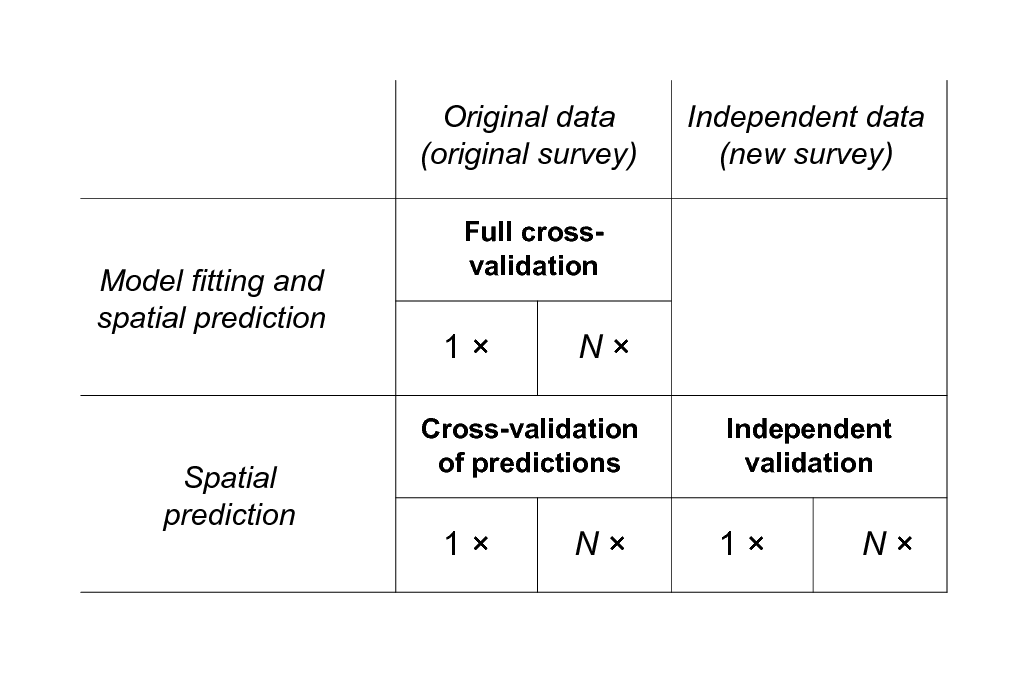

#> Saving an object of class 'gstatModel'...All regression-kriging models listed above are valid and the differences between their respective results are not likely to be large (Fig. 5.8). Regression tree combined with kriging (rpart-kriging) seems to produce slightly better results i.e. smallest cross-validation error, although the difference between the four prediction methods is, in fact, not large (±5% of variance explained). It is important to run such comparisons nevertheless, as they allow us to objectively select the most efficient method.

Figure 5.9: Predictions of the organic carbon (log-transformed values) using random forest vs linear regression-kriging. The random forest-kriging variance has been derived using the quantregForest package.

Fig. 5.9 shows the RK variance derived for the random forest model using the quantregForest package (Meinshausen 2006) and the formula in Eq.(5.21). Note that the quantregForest package estimates a much larger prediction variance than simple linear RK for large parts of the study area.

5.2.10 Regression-kriging and polygon averaging

Although many soil mappers may not realize it, many simpler regression-based techniques can be viewed as a special case of RK, or its variants. Consider for example a technique commonly used to generate predictions of soil properties from polygon maps: weighted averaging. Here the principal covariate available is a polygon map (showing the distribution of mapping units). In this model it is assumed that the trend is constant within mapping units and that the stochastic residual is spatially uncorrelated. In that case, the Best Linear Unbiased Predictor of the values is simple averaging of soil properties per unit (Webster and Oliver 2001, 43):

\[\begin{equation} \hat z({{s}}_0 ) = \bar \mu _p = \frac{1}{{n_p }}\sum\limits_{i = 1}^{n_p } {z({{s}}_i )} \tag{5.22} \end{equation}\]The output map produced by polygon averaging will exhibit abrupt changes at boundaries between polygon units. The prediction variance of this area-class prediction model is simply the sum of the within-unit variance and the estimation variance of the unit mean:

\[\begin{equation} \hat \sigma^2 ({{s}}_0 ) = \left( 1 + \frac{1}{n_p } \right) \cdot \sigma _p^2 \tag{5.23} \end{equation}\]From Eq.(5.23), it is evident that the accuracy of the prediction under this model depends on the degree of within-unit variation. The approach is advantageous if the within-unit variation is small compared to the between-unit variation. The predictions under this model can also be expressed as:

\[\begin{equation} \hat z({{s}}_0 ) = \sum\limits_{i = 1}^n {w_i \cdot z({{s}}_i)}; \qquad w_i = \left\{ {\begin{array}{*{20}c} {1/n_p } & {{\rm for} \; {{s}}_i \in p} \\ 0 & {{\rm otherwise}} \\ \end{array} } \right. \end{equation}\]where \(p\) is the unit identifier. So, in fact, weighted averaging per unit is a special version of regression-kriging where spatial autocorrelation is ignored (assumed zero) and all covariates are categorical variables.

Going back to the Meuse data set, we can fit a regression model for organic matter using soil types as predictors, which gives:

omm <- fit.gstatModel(meuse, log1p(om)~soil-1, meuse.grid)

#> Fitting a linear model...

#> Fitting a 2D variogram...

#> Saving an object of class 'gstatModel'...

summary(omm@regModel)

#>

#> Call:

#> glm(formula = log1p(om) ~ soil - 1, family = fit.family, data = rmatrix)

#>

#> Deviance Residuals:

#> Min 1Q Median 3Q Max

#> -1.0297 -0.2087 -0.0044 0.2098 0.6668

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> soil1 2.2236 0.0354 62.9 <2e-16 ***

#> soil2 1.7217 0.0525 32.8 <2e-16 ***

#> soil3 1.9293 0.1006 19.2 <2e-16 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> (Dispersion parameter for gaussian family taken to be 0.12)

#>

#> Null deviance: 672.901 on 153 degrees of freedom

#> Residual deviance: 18.214 on 150 degrees of freedom

#> (2 observations deleted due to missingness)

#> AIC: 116.6

#>

#> Number of Fisher Scoring iterations: 2and these regression coefficients for soil classes 1, 2, 3 are

equal to the mean values per class:

aggregate(log1p(om) ~ soil, meuse, mean)

#> soil log1p(om)

#> 1 1 2.2

#> 2 2 1.7

#> 3 3 1.9Note that this equality can be observed only if we remove the intercept from the regression model, hence we use:

log1p(om) ~ soil-1and NOT:

log1p(om) ~ soilThe RK model can also be extended to fuzzy memberships, in which case \({\rm{MU}}\) values are binary variables with continuous values in the range 0–1. Hence also the SOLIM model Eq.(5.9) is in fact just a special version of regression on mapping units:

\[\begin{equation} \hat z({{s}}_0 ) = \sum\limits_{c_j = 1}^{c_p} {\nu _{c_j} ({{s}}_0) \cdot z_{c_j} } = \sum\limits_{j = 1}^p { {\rm{MU}}_j \cdot \hat b_j} \hspace{.5cm} {\rm {for}} \hspace{.5cm} z_{c_j} = \frac{1}{{n_p }}\sum\limits_{i = 1}^{n_p } {z({{s}}_i )} \tag{5.24} \end{equation}\]where \({\rm{MU}}\) is the mapping unit or soil type, \(z_{c_j}\) is the modal (or most representative) value of some soil property \(z\) for the \(c_j\) class, and \(n_p\) is total number of points in some mapping unit \({\rm{MU}}\).

Ultimately, spatially weighted averaging of values per mapping unit, different types of regression, and regression kriging are all, in principle, different variants of the same statistical method. The differences are related to whether only categorical or both categorical and continuous covariates are used and whether the stochastic residual is spatially correlated or not. Although there are different ways to implement combined deterministic/stochastic predictions, one should not treat these nominally equivalent techniques as highly different.

5.2.11 Predictions at point vs block support

The geostatistical model refers to a soil variable that is defined by

the type of property and how it is measured (e.g. soil pH (KCl), soil pH

(H\(_2\)O), clay content, soil organic carbon measured with spectroscopy),

but also to the size and orientation of the soil samples that were taken

from the field. This is important because the spatial variation of the

dependent variable strongly depends on the support size (e.g. due to an

averaging out effect, the average organic content of bulked samples taken

from 1 ha plots typically has less spatial variation than that of single

soil samples taken from squares). This implies that observations at

different supports cannot be merged without taking this effect into

account (Webster and Oliver 2001). When making spatial predictions using

kriging one can use block-kriging (Webster and Oliver 2001) or

area-to-point kriging (Kyriakidis 2004) to make predictions at

larger or smaller supports. Both block-kriging and area-to-point kriging

are implemented in the gstat package via the generic function krige (Pebesma 2004).

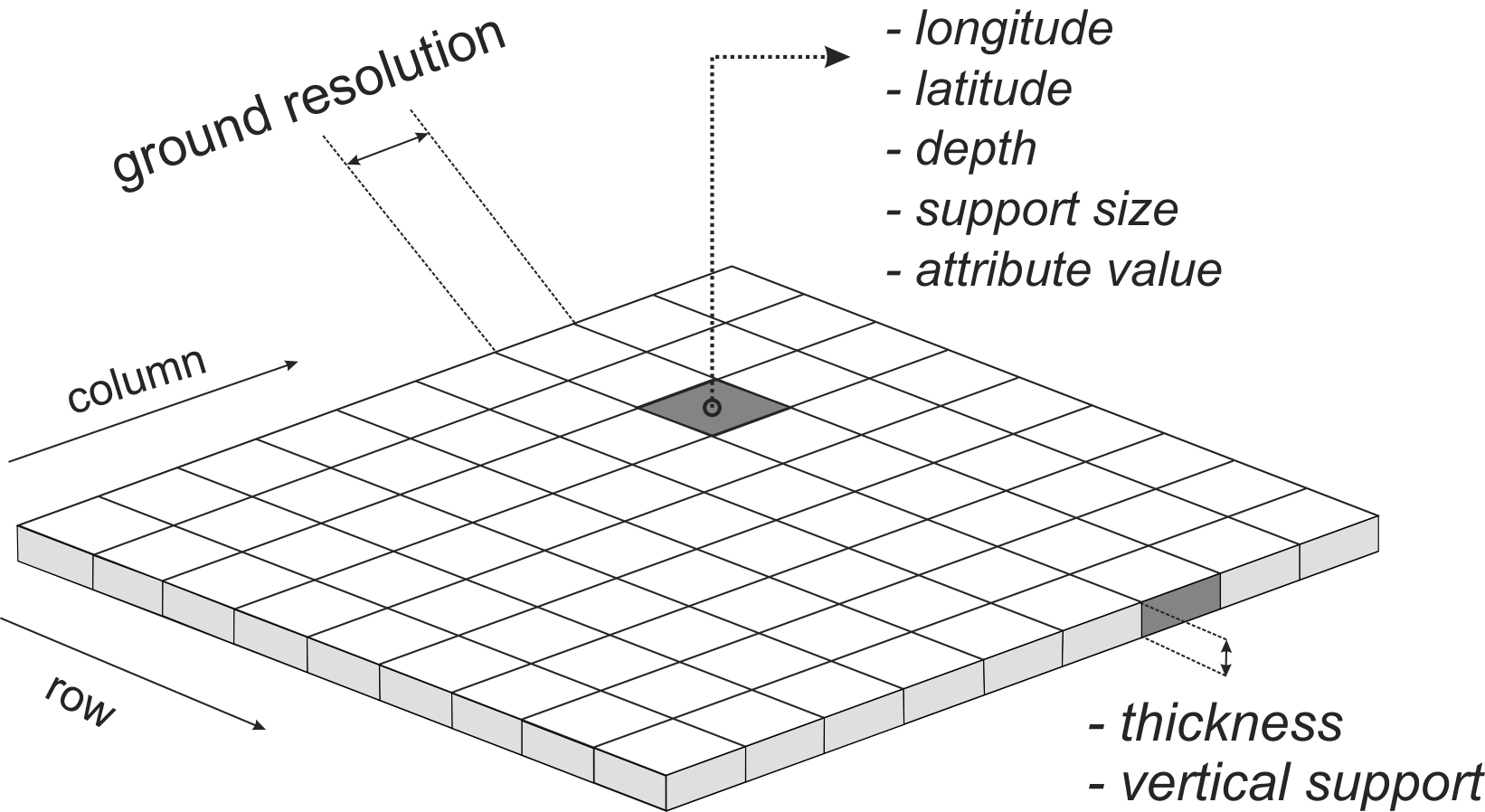

Support can be defined as the integration volume or aggregation level at which an observation is taken or for which an estimate or prediction is given. Support is often used in the literature as a synonym for scale — large support can be related to coarse or general scales and vice versa (Hengl 2006). The notion of support is important to characterize and relate different scales of soil variation (Schabenberger and Gotway 2005). Any research of soil properties is made with specific support and spatial spacing, the latter being the distance between sampling locations. If properties are to be used with different support, e.g. when model inputs require a different support than the support of the observations, scaling (aggregation or disaggregation) becomes necessary (Heuvelink and Pebesma 1999).

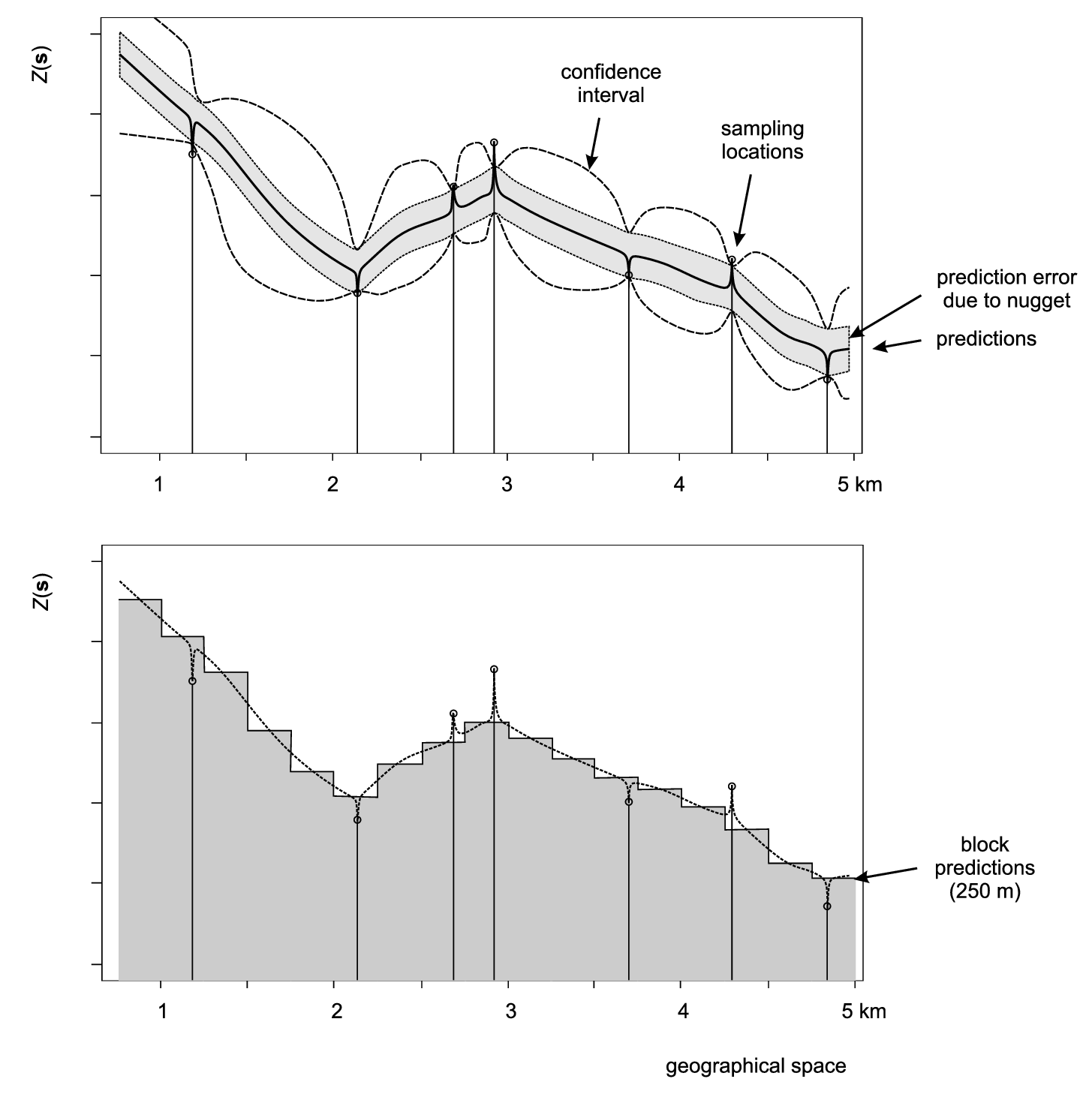

Figure 5.10: Scheme with predictions on point (above) and block support (below). In the case of various versions of kriging, both point and block predictions smooth the original measurements proportionally to the nugget variation. After Goovaerts (1997).

Depending on how significant the nugget variation is, prediction variance estimated by a model can be significantly reduced by increasing the support from points to blocks. The block kriging variance is smaller than the point kriging variance for an amount approximately equal to the nugget variation. Even if we take a block size of a few meters this decreases the prediction error significantly, if indeed the nugget variation occurs within a few meters. Because, by definition, many kriging-type techniques smooth original sampled values, one can easily notice that for support sizes smaller than half of the average shortest distance between the sampling locations, both point and block predictions might lead to practically the same predictions (see some examples by Goovaerts (1997, 158), Heuvelink and Pebesma (1999) and/or Hengl (2006)).

Consider, for example, point and block predictions and simulations using the estimates of organic matter content in the topsoil (in dg/kg) for the Meuse case study. We first generate predictions and simulations on point support:

omm <- fit.gstatModel(meuse, log1p(om)~dist+soil, meuse.grid)

#> Fitting a linear model...

#> Fitting a 2D variogram...

#> Saving an object of class 'gstatModel'...

om.rk.p <- predict(omm, meuse.grid, block=c(0,0))

#> Subsetting observations to fit the prediction domain in 2D...

#> Generating predictions using the trend model (RK method)...

#> [using ordinary kriging]

#>

100% done

#> Running 5-fold cross validation using 'krige.cv'...

#> Creating an object of class "SpatialPredictions"

om.rksim.p <- predict(omm, meuse.grid, nsim=20, block=c(0,0))

#> Subsetting observations to fit the prediction domain in 2D...

#> Generating 20 conditional simulations using the trend model (RK method)...

#> drawing 20 GLS realisations of beta...

#> [using conditional Gaussian simulation]

#>

100% done

#> Creating an object of class "RasterBrickSimulations"

#> Loading required package: raster

#>

#> Attaching package: 'raster'

#> The following object is masked from 'package:nlme':

#>

#> getData

#> The following objects are masked from 'package:aqp':

#>

#> metadata, metadata<-where the argument block defines the support size for the predictions

(in this case points). To produce predictions on block support for

square blocks of 40 by 40 m we run:

om.rk.b <- predict(omm, meuse.grid, block=c(40,40), nfold=0)

#> Subsetting observations to fit the prediction domain in 2D...

#> Generating predictions using the trend model (RK method)...

#> [using ordinary kriging]

#>

100% done

#> Creating an object of class "SpatialPredictions"

om.rksim.b <- predict(omm, meuse.grid, nsim=2, block=c(40,40), debug.level=0)

#> Subsetting observations to fit the prediction domain in 2D...

#> Generating 2 conditional simulations using the trend model (RK method)...

#> Creating an object of class "RasterBrickSimulations"

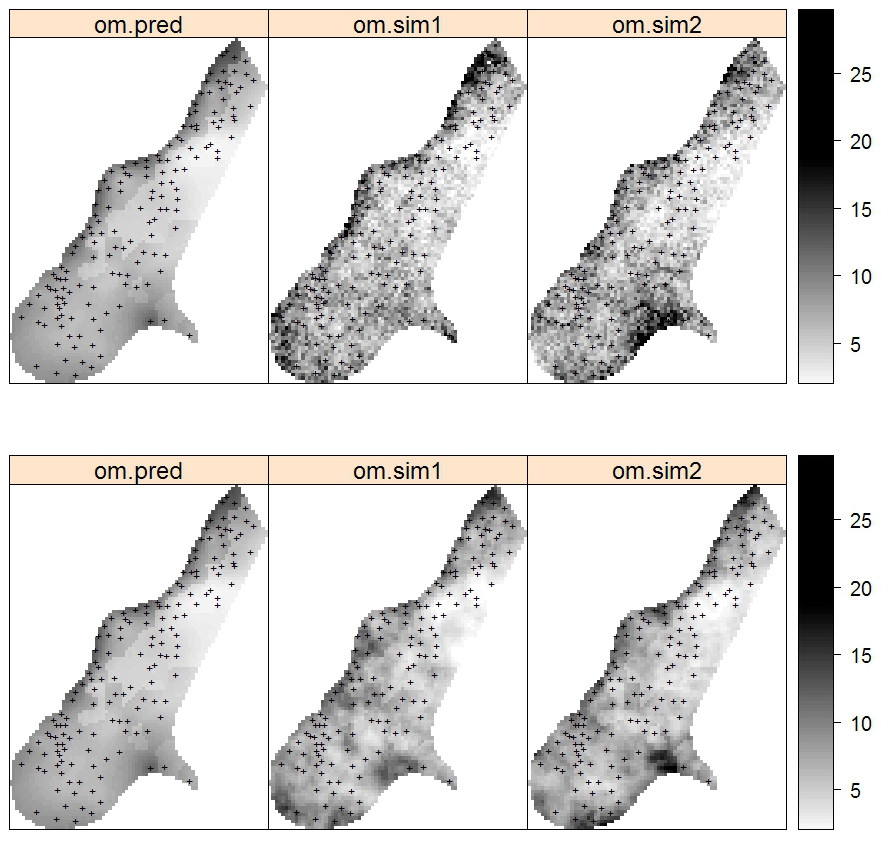

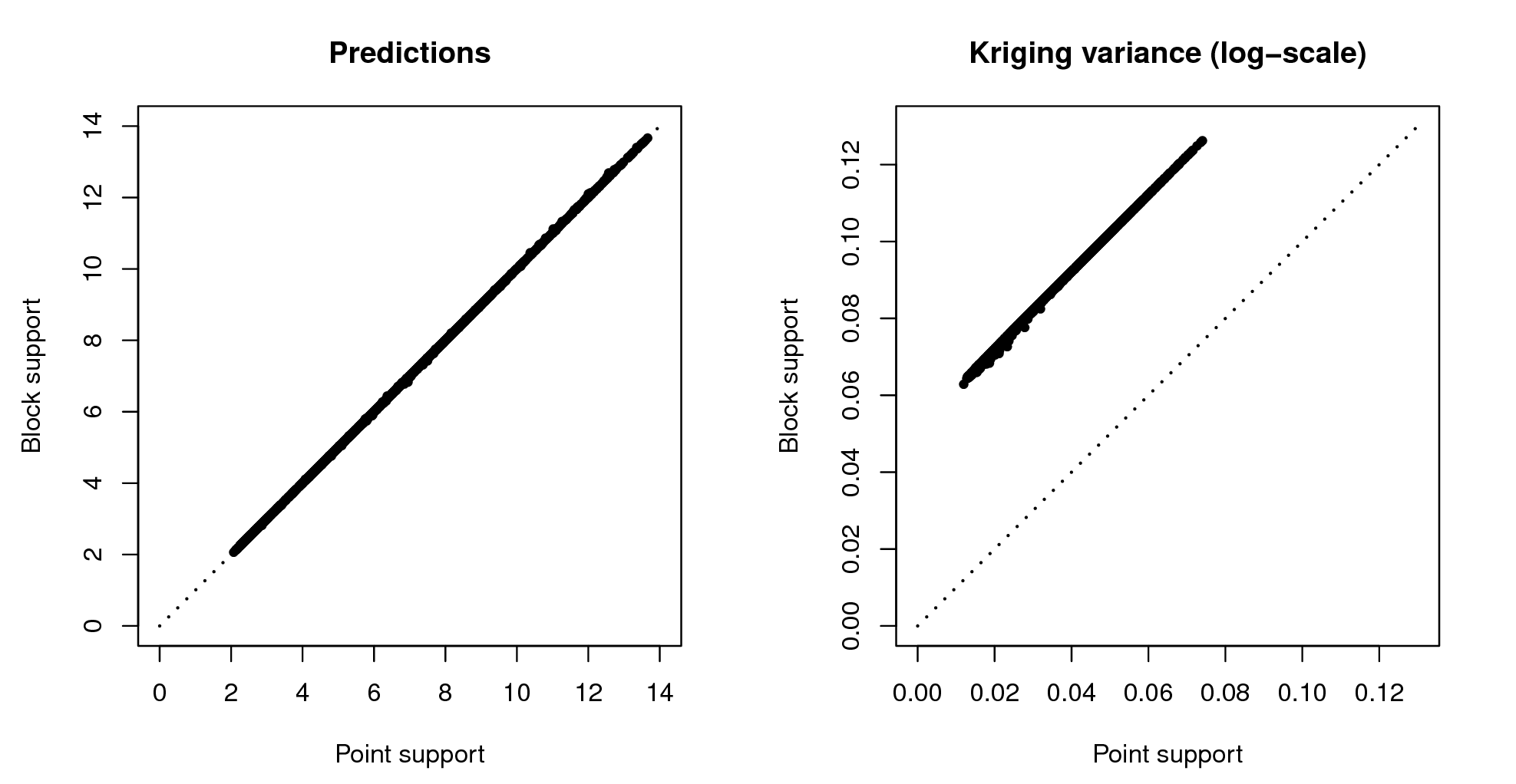

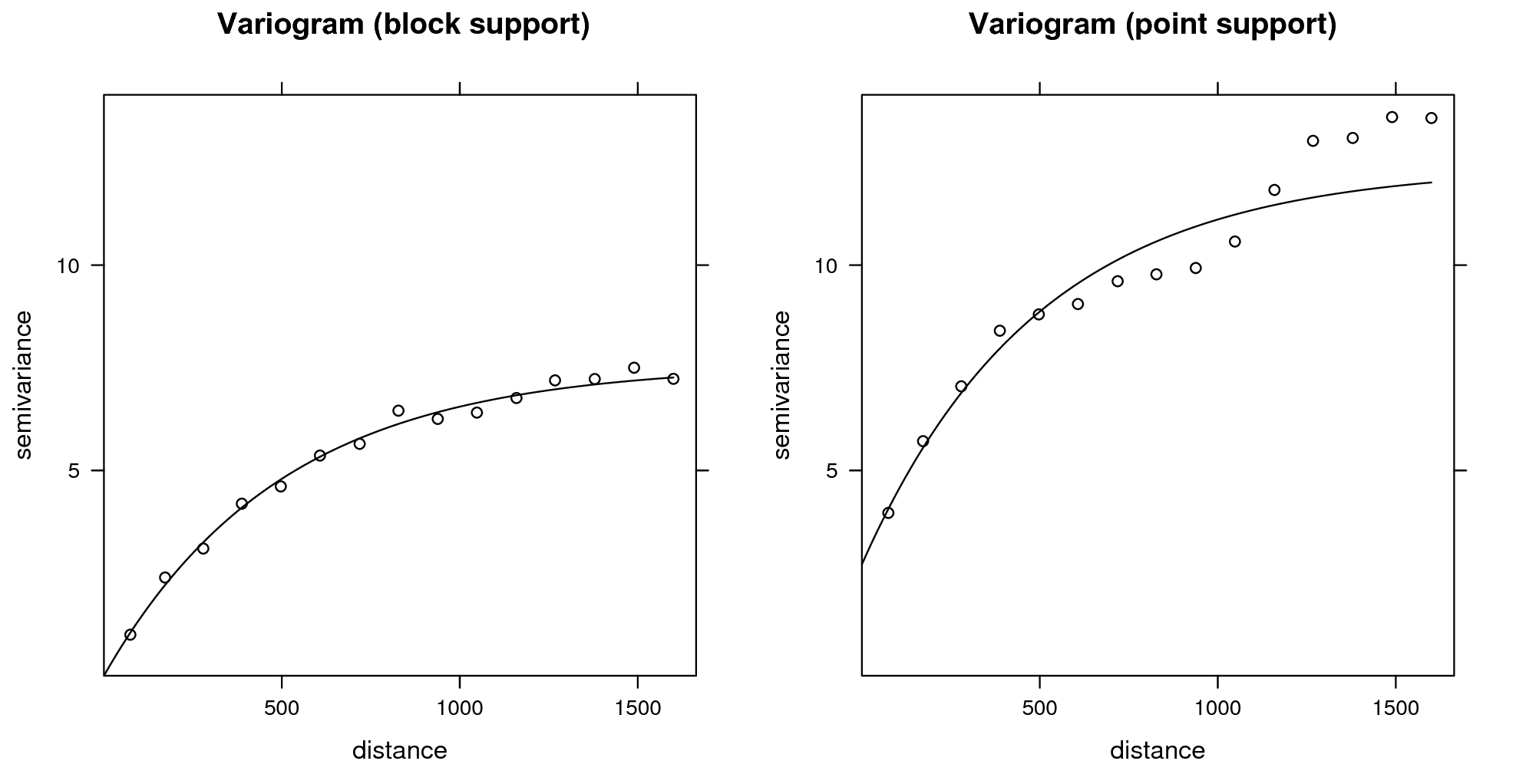

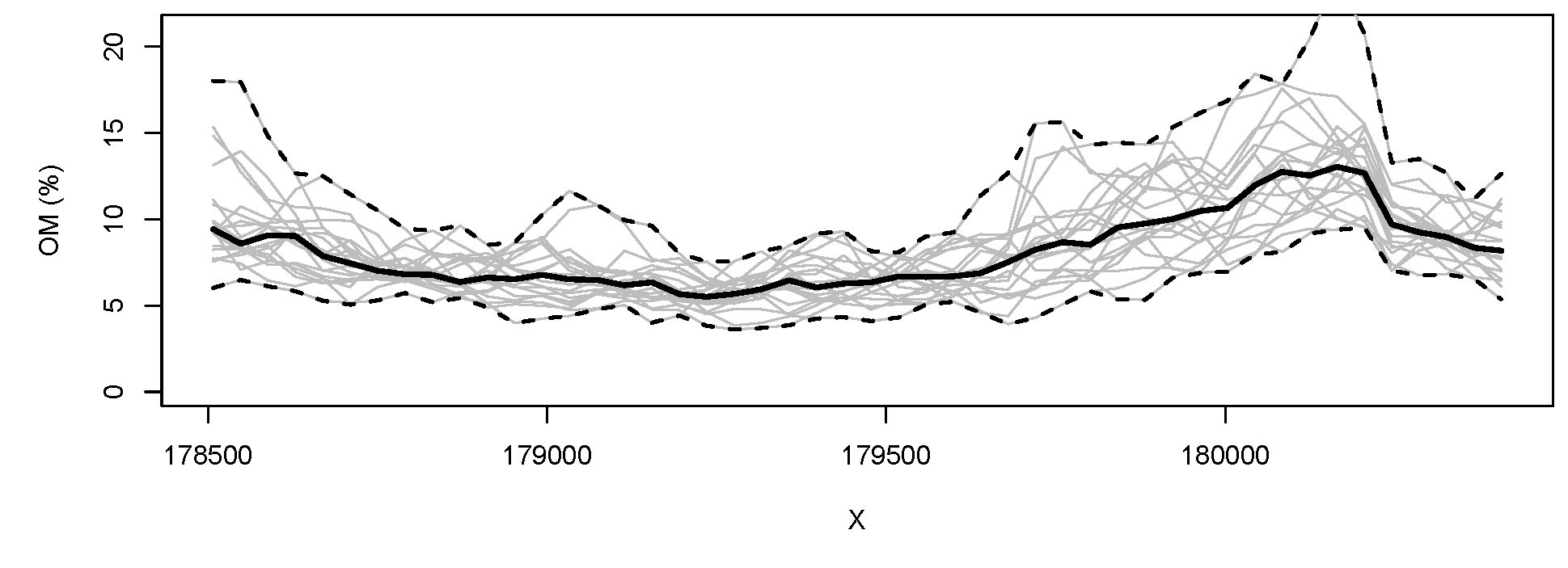

## computationally intensiveVisual comparison confirms that the point and block kriging prediction maps are quite similar, while the block kriging variance is much smaller than the point kriging variance (Fig. 5.11).

Even though block kriging variances are smaller than point kriging

variances this does not imply that block kriging should always be

preferred over point kriging. If the user interest is in point values

rather than block averages, point kriging should be used. Block kriging

is also computationally more demanding than point kriging. Note also

that it is more difficult (read: more expensive) to validate block

kriging maps. In the case of point predictions, maps can be validated to

some degree using cross-validation, which is inexpensive. For example,

via one can estimate the cross-validation error using the krige.cv

function. The gstat package reports automatically the cross-validation error

(Hengl, Nikolić, and MacMillan 2013):

om.rk.p

#> Variable : om

#> Minium value : 1

#> Maximum value : 17

#> Size : 153

#> Total area : 4964800

#> Total area (units) : square-m

#> Resolution (x) : 40

#> Resolution (y) : 40

#> Resolution (units) : m

#> GLM call formula : log1p(om) ~ dist + soil

#> Family : gaussian

#> Link function : identity

#> Vgm model : Exp

#> Nugget (residual) : 0.048

#> Sill (residual) : 0.065

#> Range (residual) : 285

#> RMSE (validation) : 2.5

#> Var explained : 47.3%

#> Effective bytes : 313

#> Compression method : gzip

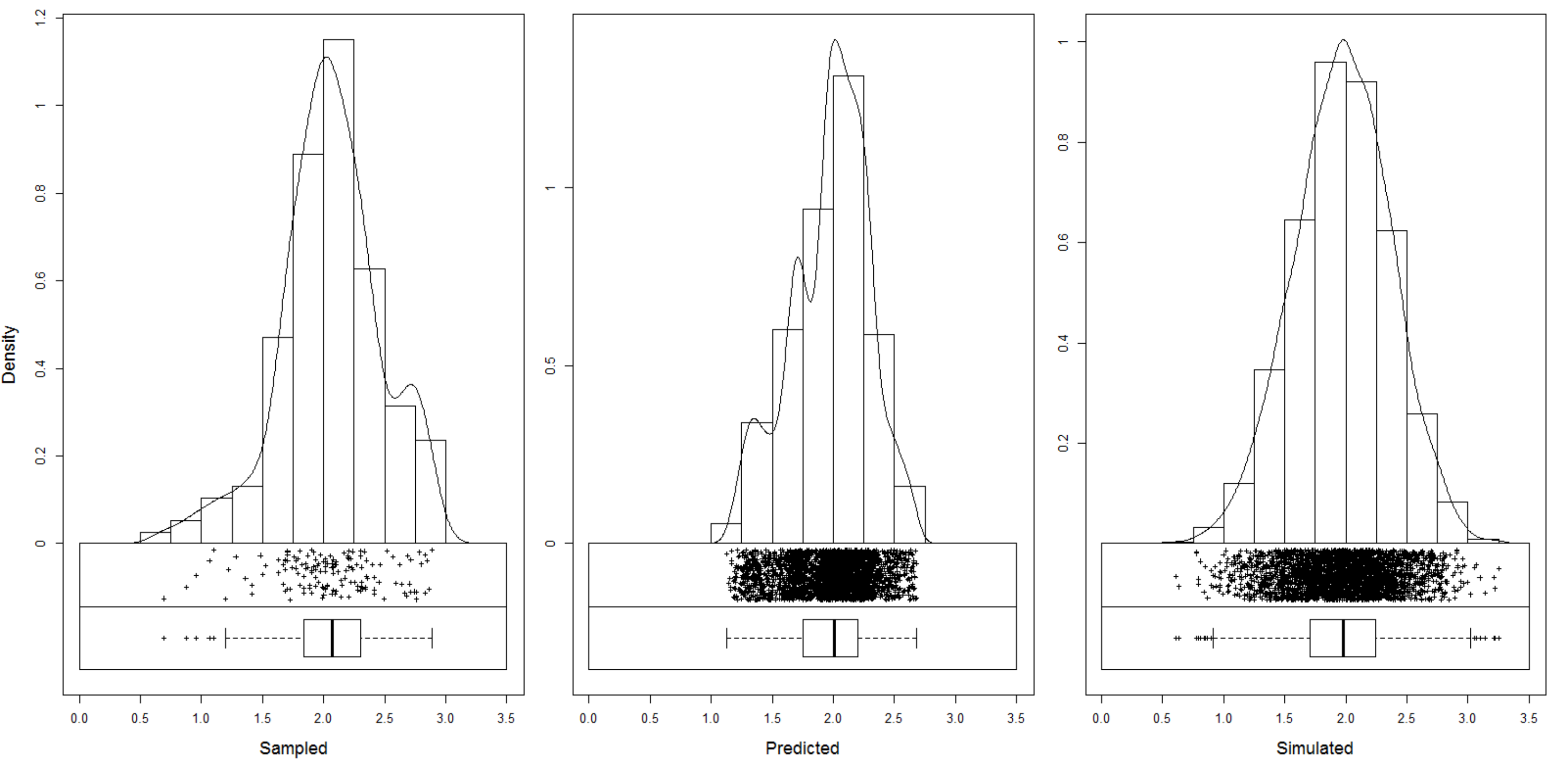

Figure 5.11: Predictions and simulations (2) at point (above) and block (below) support using the Meuse dataset. Note that prediction values produced by point and block methods are quite similar. Simulations on block support produce smoother maps than the point-support simulations.

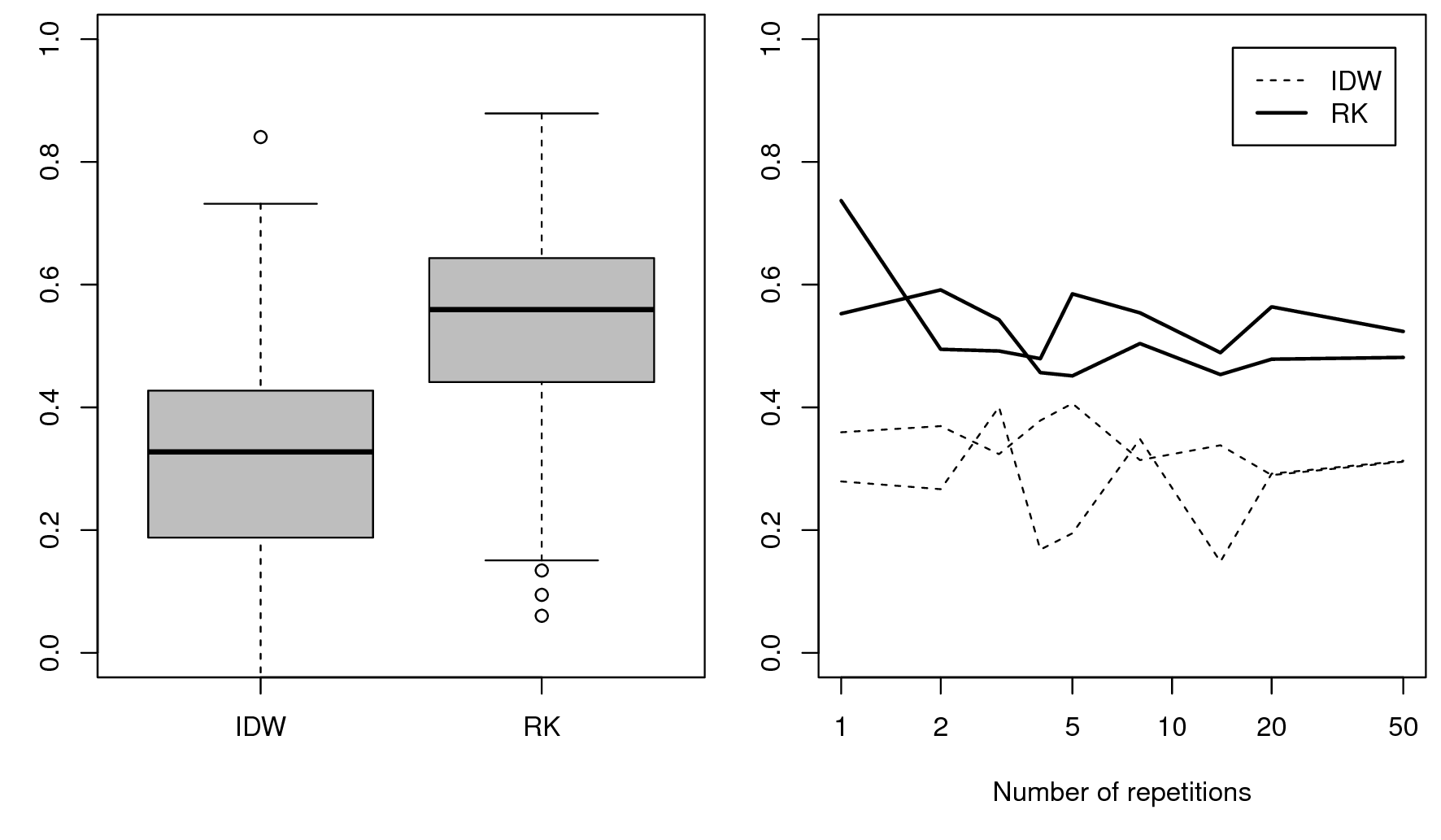

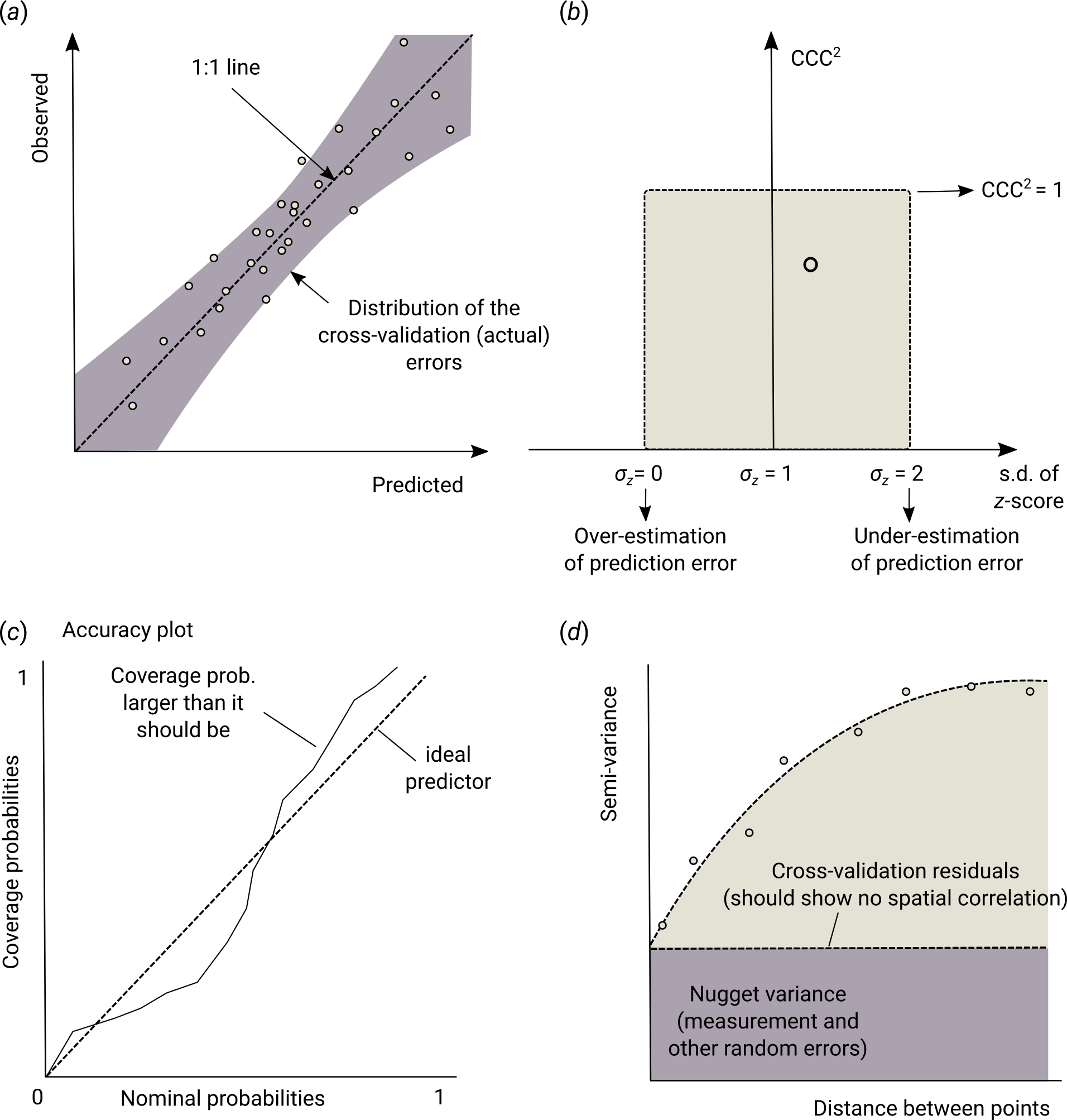

which shows that the mapping accuracy at point support is ca. 53% of the original variance (see further Eq.(5.31)).